Key findings from our survey report on the top factors inhibiting and contributing to success with LLMs

In the world of AI, Large Language Models (LLMs) dominate today’s conversation. When ChatGPT, OpenAI’s LLM-powered chatbot, launched in November 2022, it ignited a wildfire of interest in the underlying LLM technology that powers the application. These novel models marked a new achievement in AI, with robust conversational abilities that could tackle a seemingly endless number of questions through natural language prompts. As a result, many enterprises have spent the last year scrambling to find out how to use LLMs to gain a competitive edge.

Download the full survey report: https://pbase.ai/LLMsurvey2023.

To better understand the opportunity for LLMs and, more importantly, what it takes to be successful, we surveyed over 150 executives, data scientists, machine learning engineers, developers, and product managers in 29 countries on their use of LLMs. In the report we cover a broad range of topics, including:

- Motivations for investing in LLMs and the top use cases,

- Critical challenges faced when deploying LLMs into production,

- The growing interest in and opportunity for open-source LLMs,

- Methods for customization with a deep dive into fine-tuning.

This blog post and the full research report aim to give teams experimenting with LLMs insight into how they can build the right technology foundation and strategy to extract the most value from their LLM investments. Let's dive into a high-level summary of some of our findings!

The Future is Open: Open-source vs. Commercial LLMs

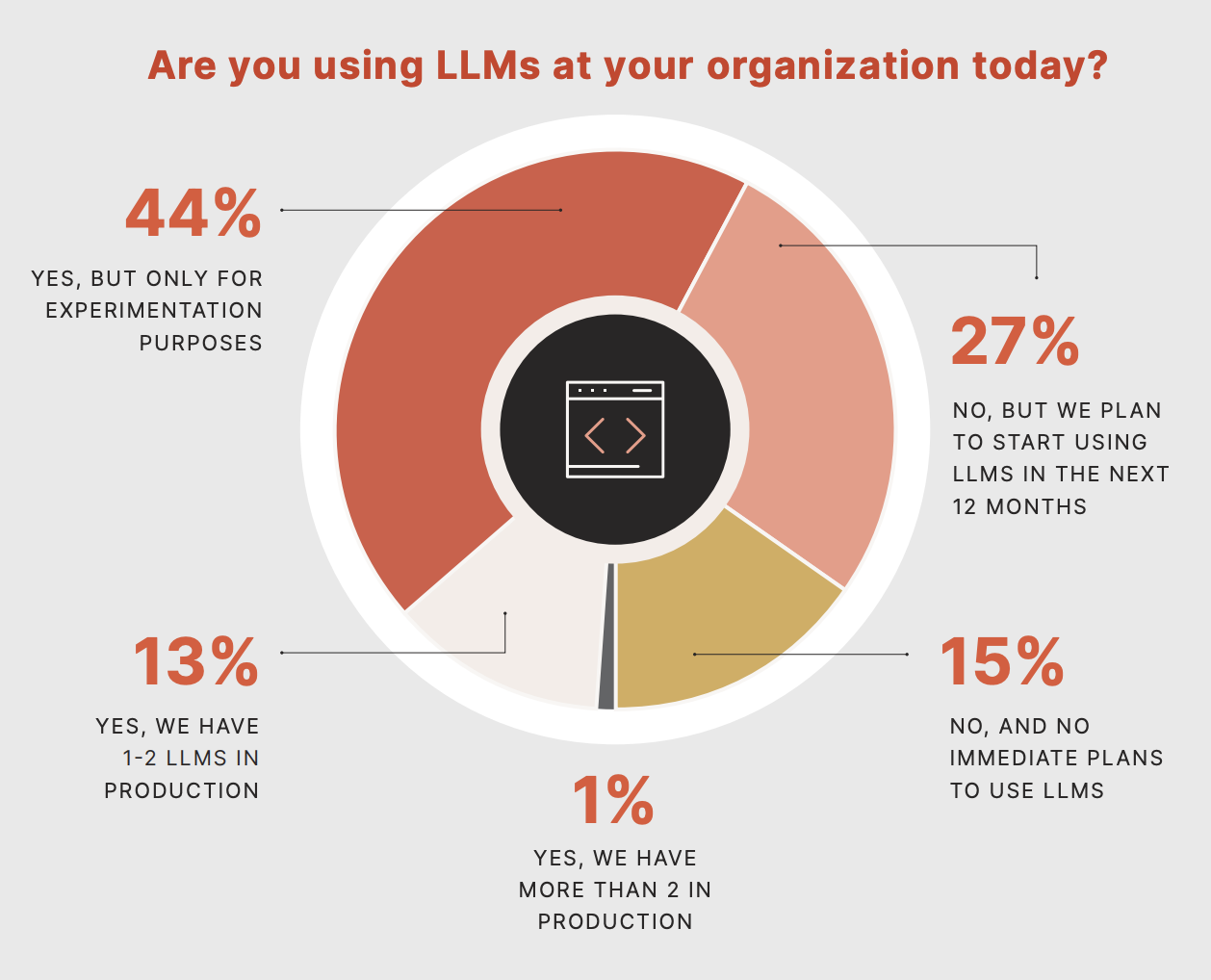

Over half (58%) of survey respondents are either experimenting with LLMs or have already put them into production. Only 15% have no plans to use LLMs. It’s clear that the LLM revolution has begun!

The majority of enterprises are either experimenting with or attempting to productionize LLMs.

Perhaps more interesting is that only a quarter of surveyed companies are comfortable using commercial LLMs in production. The blocker: sharing data with commercial LLM vendors via APIs is a deal breaker for most organizations. Cost is another primary concern. You can read more about both in the report.

With the almost weekly release of a new and even more powerful open-source model, organizations have countless options when building LLM-powered applications. Increasingly, we're finding that enterprises are choosing to build on top of best-of-breed open-source models deployed in their own environment as an alternative to renting LLMs from commercial providers.

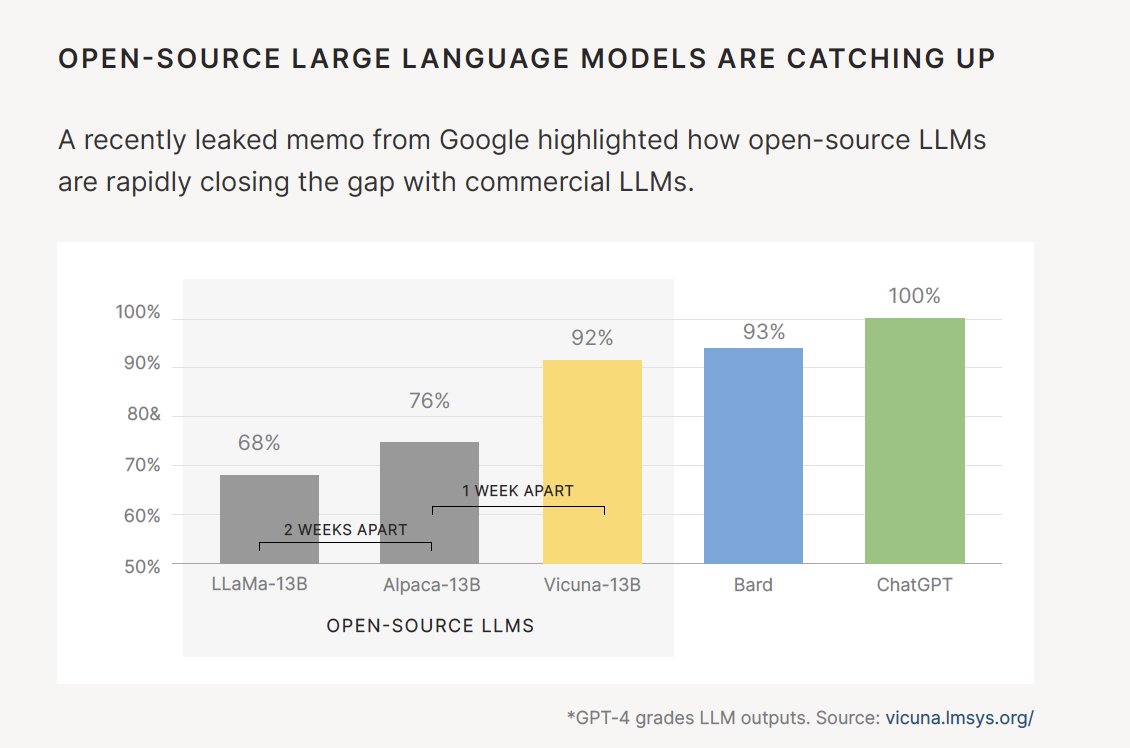

Earlier this year a leaked memo from Google highlighted how open-source LLMs are rapidly closing in on commercial offerings.

“Clearly, companies are investing in the personnel and technologies necessary to work with emerging generative AI technologies in support of production-scale outcomes. The trick, of course, will rest not in building these outcomes, but ensuring that they deliver consistent, secure, responsible outcomes. With an increasing desire to customize and deploy open-source models, enterprises will need to invest in operational tooling and infrastructure capable of keeping up with the rapid pace of innovation in the open-source community.”

- Bradley Shimmin, Chief Analyst, AI Platforms, Analytics and Data Management at Omdia

Top Challenges and Solutions for Productionizing LLMs

Top challenges organizations face when putting LLMs into production.

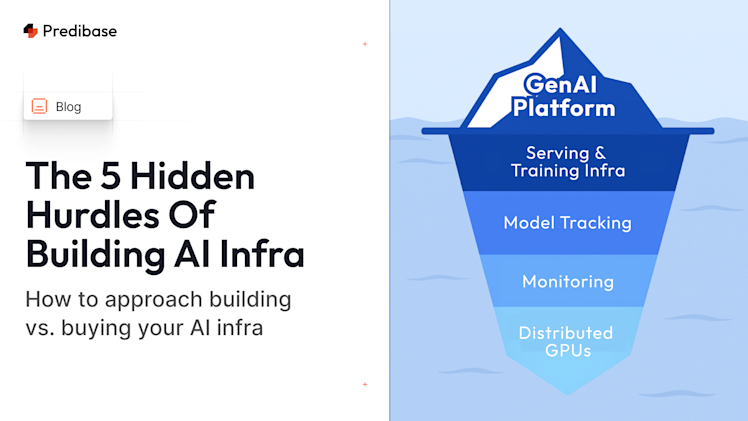

While most enterprises are still in the experimentation phase, some have begun to put LLMs into production. To understand how that journey is progressing, we asked respondents to share the hurdles they face along the way. Here are the top five challenges, ranked by number of responses, along with our recommendations for overcoming each:

- Sharing Proprietary Data (33%) - the #1 concern is handing proprietary data over to a third party. Customizing an LLM for your task requires either injecting your data into the prompt or training the LLM on it. To overcome this challenge, organizations should deploy open-source LLMs within their own virtual private cloud. Deploying in your own cloud lets you keep ownership of both your data and model IP.

- Customization and Fine-tuning (30%) - this is the second most prominent hurdle organizations face when putting LLMs into production. Customizing LLMs often requires highly curated data along with deep expertise in model training and distributed infrastructure. Organizations should explore configuration-driven model training tools, such as the open-source Ludwig.ai, to address the complexities of model training. This simplified approach makes it easier to iterate rapidly and fine-tune models.

- Too Costly to Train (17%) - ideally, everyone would build their own LLM from scratch, but this requires massive compute resources and is out of reach for all but a few organizations. Don’t reinvent the wheel. Build on top of state-of-the-art open-source LLMs like Llama 2 by experimenting with customization techniques such as fine-tuning and Retrieval-Augmented Generation (RAG). These techniques can deliver similar results at a lower cost. Compression techniques can also be used to shrink LLMs for task-oriented jobs.

- Hallucinations (12%) - LLMs occasionally provide responses that appear accurate but are not based on factual information. To overcome this, consider using techniques like RAG to supply the LLM with real information and reduce the likelihood of hallucinations. You can also employ fine-tuning to teach the model not to respond when it doesn’t have sufficient information. And for traditional classification, don’t forget that supervised ML models may work better for your task.

- Latency (8%) - many LLM applications, like chatbots, require sub-second response times. Deploying LLMs on your own infrastructure and optimizing for latency and compute costs requires deep infrastructure expertise. An obvious solution is using a commercial LLM vendor's API, but that can be cost-prohibitive and requires sharing your data with the vendor. Consider an LLM infrastructure provider like Predibase that makes it easy to deploy and serve open-source LLMs within your cloud environment.
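
To make the RAG recommendation above more concrete, here is a minimal sketch of the retrieve-then-prompt pattern. It is illustrative only: the function names (`retrieve`, `build_prompt`) and the sample documents are hypothetical, and simple word-overlap scoring stands in for the embedding-based search a real system would more likely use. The resulting prompt would then be sent to whichever LLM you host.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(question, documents, k=2):
    """Return the k documents most similar to the question.

    Assumption: word overlap is a stand-in for embedding similarity.
    """
    q = Counter(tokenize(question))
    ranked = sorted(
        documents,
        key=lambda d: cosine(q, Counter(tokenize(d))),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(question, documents, k=2):
    """Place the retrieved passages in the prompt ahead of the question."""
    context = "\n".join(f"- {d}" for d in retrieve(question, documents, k))
    return (
        "Answer using only the context below. "
        "If the context is insufficient, say you don't know.\n"
        f"Context:\n{context}\n"
        f"Question: {question}\nAnswer:"
    )


# Hypothetical proprietary documents that never leave your environment.
DOCS = [
    "The refund window for annual plans is 30 days from purchase.",
    "Support is available by email on weekdays.",
    "Annual plans renew automatically unless cancelled.",
]

if __name__ == "__main__":
    print(build_prompt("What is the refund window for annual plans?", DOCS, k=1))
```

Because retrieval and prompt construction both run locally, this pattern pairs naturally with a privately hosted open-source model: the proprietary passages only ever appear in prompts sent to infrastructure you control.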

Additional Findings on LLM Use Cases and Customization Techniques

In the report, we also explore top use cases for LLMs, such as Information Extraction, Q&A Systems, and Personalization, and take a deep dive into customization techniques such as fine-tuning. Download the full report to get all those details and results.

What’s Next? The Path to Putting LLMs into Production

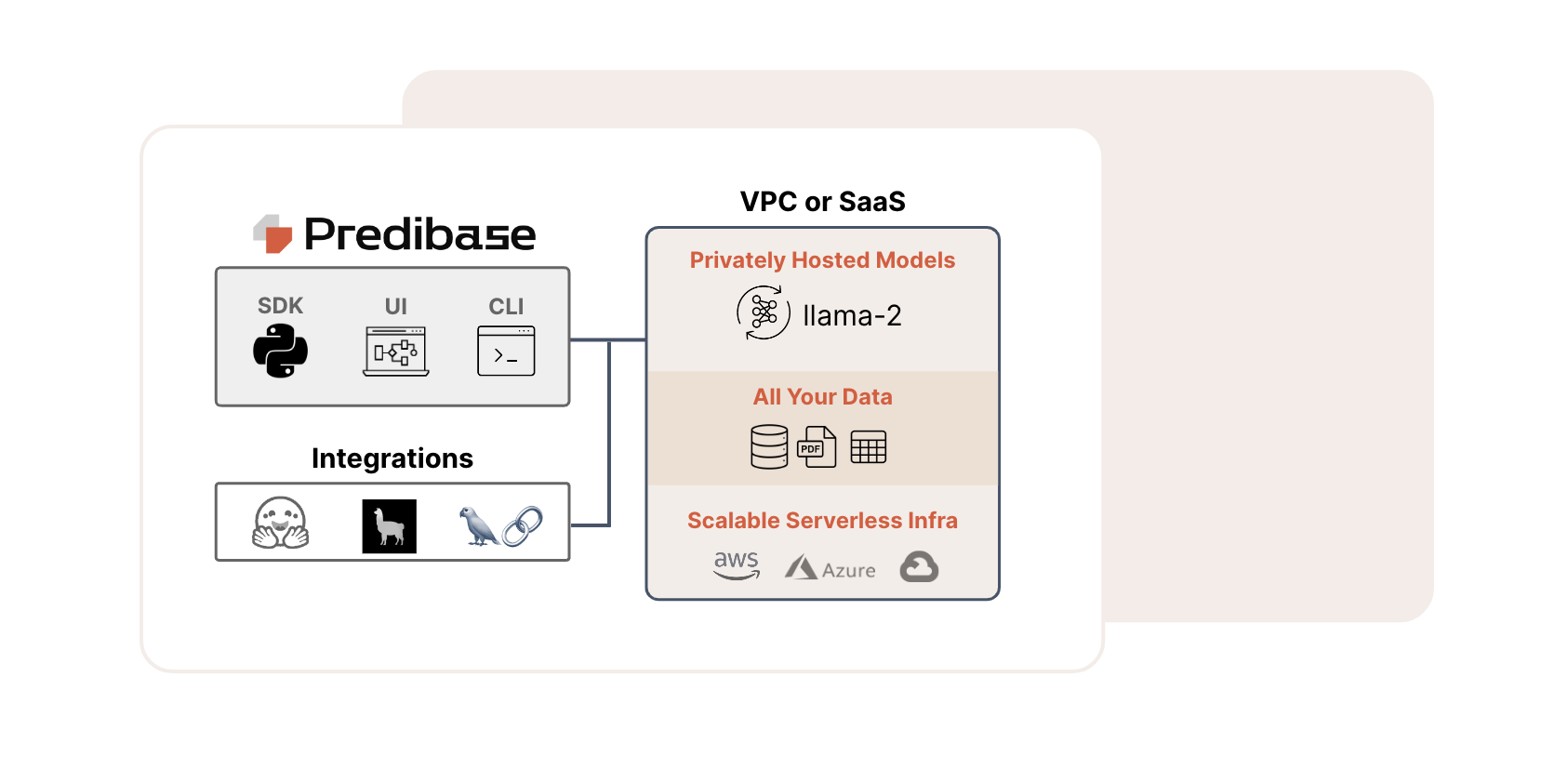

Privately host custom LLMs in your own cloud.

Based on the survey, it’s clear that teams want to customize and deploy open-source LLMs without giving up control of their proprietary data. Predibase addresses these challenges with the first engineering platform for fine-tuning and serving any open-source LLM. Built on best-in-class managed infrastructure, Predibase provides the fastest way for your teams to deploy, operationalize, and customize LLMs on your data in your own cloud.

- Sign up for a free trial of Predibase to start building your own LLM

- Download the full report: Beyond the Buzz: A Look at LLMs in Production

“We see a massive opportunity for customized open-source LLMs to help our teams generate realtime insights across our large corpus of project reports. The insights generated by this effort have big potential to improve the outcomes of our conservation efforts. We’re excited to partner with Predibase on this initiative.”

- Dave Thau, Global Data and Technology Lead Scientist, WWF