Announcing the Predibase SDK for Efficient Fine-tuning and Serving including Expanded Support for A100s

Today, we are thrilled to announce our new Python SDK for efficient LLM fine-tuning and serving, as well as early access to the Predibase AI Cloud with A100 GPUs. This new offering enables developers to train smaller, task-specific LLMs using any GPU within their cloud or ours. Fine-tuned models can then be served using our lightweight, modular LLM serving architecture called LoRA Exchange (LoRAX), which dynamically loads and unloads models on demand in seconds. This allows many fine-tuned models to be served together at no additional cost per model. This approach to fine-tuning and serving is so efficient that we are excited to offer unlimited fine-tuning and serving of Llama-2-13B for free in a 2-week trial.

Challenges with LLMs in Production

Over 75% of organizations have no plans to put commercial LLMs into production. For them, sharing data and giving up ownership of models to LLM vendors like OpenAI or Anthropic is effectively a non-starter. When you compare the long-term costs of commercial models and open-source models, it’s evident that the ROI of commercial LLMs is often highest during the prototyping stage. If you consider your data, models, and weights as your intellectual property (“IP”), renting out your IP by using a commercial LLM would be reckless in the long term. In fact, we believe that an enterprise’s IP should define its moat: a sustainable, competitive edge that is not easily replicable by others. And so the question we often pose to customers is “Why rent your IP when you can own it?”

As enterprises look to answer this question, they are increasingly looking to productionize open-source LLMs in their cloud. Today, this comes with its own set of infrastructure challenges. For example, serving the latest and greatest open-source LLMs, like LLaMA-2-70b and Falcon-180b, can cost over $10,000 a month on dedicated hardware in a private cloud. The alternative approach of fine-tuning a smaller model to get equivalent performance for a specific task can also be cost-prohibitive. Even if you are one of the lucky few with access to A100/H100 GPUs in the cloud, your training costs can reach thousands of dollars per job due to a lack of automated, reliable, and cost-effective fine-tuning infrastructure. Additionally, countless engineering hours are wasted debugging CUDA out-of-memory errors, maximizing GPU utilization, and setting up complex multi-node distributed training. Fine-tuned models then need to be evaluated and compared against each other, requiring more resources and expensive deployments before even getting to the cost of serving in production.

From General to Specialized AI

Predibase aims to solve these pain points through a new approach to LLM fine-tuning and serving called Specialized AI. While recent interest in large language models has come from consumers experiencing the broad, emergent capabilities of LLMs like GPT-4, we think the future for enterprises looks very different. There are two primary drivers that inform this opinion:

- Getting LLMs into production is surprisingly difficult and expensive

- Enterprises don’t really need general intelligence; they need task-specific models

Given this reality, one natural approach for organizations to get the benefit of LLMs is to focus their attention on domain-specific models that can deliver value. Indeed, at Predibase, we believe the future of enterprise LLM adoption will not look like one general model, but rather a series of hundreds of specialized, fine-tuned models. We are bullish on fine-tuning being able to unlock new levels of efficiency, speed, and cost-effectiveness for all those pursuing AI application development.

Introducing the Brand-New Predibase Python SDK

Rather than boiling the ocean with massive 70b+ parameter models, Predibase enables engineers to train smaller, task-specific LLMs using commodity GPU hardware like NVIDIA T4s within their own cloud. Fine-tuned models are seamlessly served using Predibase’s lightweight, modular LLM serving architecture that dynamically loads and unloads models on demand in a matter of seconds.
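To make "loads and unloads models on demand" concrete, here is a minimal sketch of one way such a scheme could work: a small cache that keeps a fixed number of fine-tuned adapters resident alongside a shared base model and unloads the least recently used one when a new adapter is requested. This is an illustration only, not the LoRAX implementation. The `AdapterCache` class, the `fake_load` function, the adapter names, and the least-recently-used eviction policy are all assumptions made for the example.

```python
from collections import OrderedDict
from typing import Callable, Dict, List


class AdapterCache:
    """Keep at most `capacity` fine-tuned adapters loaded at once (hypothetical sketch)."""

    def __init__(self, capacity: int, load_fn: Callable[[str], Dict]):
        if capacity < 1:
            raise ValueError("capacity must be at least 1")
        self.capacity = capacity
        self.load_fn = load_fn  # assumed loader: adapter id -> adapter weights
        self._resident: "OrderedDict[str, Dict]" = OrderedDict()
        self.evicted: List[str] = []  # ids of adapters that were unloaded, in order

    def get(self, adapter_id: str) -> Dict:
        if adapter_id in self._resident:
            # Already loaded: mark it as most recently used and return it.
            self._resident.move_to_end(adapter_id)
            return self._resident[adapter_id]
        # Not loaded: if the cache is full, unload the least recently used adapter.
        if len(self._resident) >= self.capacity:
            old_id, _ = self._resident.popitem(last=False)
            self.evicted.append(old_id)
        weights = self.load_fn(adapter_id)
        self._resident[adapter_id] = weights
        return weights

    def resident_ids(self) -> List[str]:
        """Loaded adapter ids, ordered from least to most recently used."""
        return list(self._resident)


def fake_load(adapter_id: str) -> Dict:
    # Stand-in for reading adapter weights from storage.
    return {"id": adapter_id}


cache = AdapterCache(capacity=2, load_fn=fake_load)
for request in ["support-bot", "sql-gen", "support-bot", "summarizer"]:
    cache.get(request)

print(cache.resident_ids())  # adapters still loaded
print(cache.evicted)         # adapters that were unloaded to make room
```

In this run, the fourth request finds the cache full, so the adapter that was used least recently (`sql-gen`) is unloaded to make room for `summarizer`. A real serving system would also have to handle concurrent requests and batching, which this sketch ignores.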

For users looking to productionize open-source LLMs, Predibase is excited to announce our brand-new Python SDK with the following benefits:

- Automatic Memory-Efficient Fine-Tuning: Predibase takes any open-source LLM and compresses it down to a form that can train on even the cheapest and most readily available GPUs. Because Predibase is built on top of the open-source Ludwig framework, users need only specify the base model and dataset in a few lines of code. Predibase’s training system will then automatically apply 4-bit quantization, low-rank adaptation, memory paging / offloading, and other optimizations to ensure training succeeds on whatever hardware is available at the fastest speeds possible.

- Serverless Right-Sized Training Infrastructure: Predibase’s in-house orchestration logic will find the most cost-effective hardware in your cloud to run each training job, with built-in fault tolerance, metric and artifact tracking, and one-click deployment capabilities.

- Cost-Effective Serving for Fine-Tuned Models: Each LLM deployment can be configured to scale up and down with traffic, and can be hosted either standalone or dynamically. Dynamically served LLMs can be packed together with hundreds of other specialized fine-tuned LLMs, resulting in over 100x cost reduction compared with dedicated deployments. Each fine-tuned LLM can be loaded and queried in a matter of seconds following fine-tuning. No need to deploy each model on a separate GPU. See LoRA Exchange (LoRAX) to learn more.
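A rough back-of-the-envelope calculation shows why the 4-bit quantization and low-rank adaptation mentioned in the first benefit above matter on small GPUs. The sketch below estimates only the memory needed to hold model weights and the number of trainable parameters LoRA adds for a single weight matrix. The round 13-billion parameter count, the 4096 x 4096 layer shape, and the rank of 8 are illustrative assumptions, and the estimate ignores activations, optimizer state, and quantization overhead.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Memory needed just to store the weights, in GB (1 GB = 10**9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


def lora_trainable_params(d_in: int, d_out: int, rank: int) -> int:
    """LoRA trains two small matrices, B (d_out x rank) and A (rank x d_in),
    instead of updating the full d_out x d_in weight matrix."""
    return rank * (d_in + d_out)


# Assumption: a 13-billion-parameter model, as a round number.
NUM_PARAMS = 13e9

fp16_gb = weight_memory_gb(NUM_PARAMS, bits_per_param=16)
int4_gb = weight_memory_gb(NUM_PARAMS, bits_per_param=4)
print(f"16-bit weights: {fp16_gb:.1f} GB")
print(f" 4-bit weights: {int4_gb:.1f} GB")

# Assumption: one 4096 x 4096 projection matrix adapted with rank 8.
full = 4096 * 4096
lora = lora_trainable_params(d_in=4096, d_out=4096, rank=8)
print(f"Full update: {full:,} params; LoRA update: {lora:,} params "
      f"({full // lora}x fewer)")
```

Under these assumptions, the 16-bit weights alone need about 26 GB, more than the 16 GB available on an NVIDIA T4, while the 4-bit weights need about 6.5 GB. The LoRA update for the example layer trains 256 times fewer parameters than a full update of that layer.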

Introducing Predibase AI Cloud

As part of today’s launch, we are also revealing the Predibase AI Cloud, which is currently in early access for select customers. Predibase AI Cloud is the fully managed offering of the Predibase platform, where we manage the infrastructure and compute on your behalf. Specifically, users can take advantage of:

- Instantly Available, High-End GPUs: Reliable, efficient fine-tuning on highly-performant A100 and H100 GPU clusters

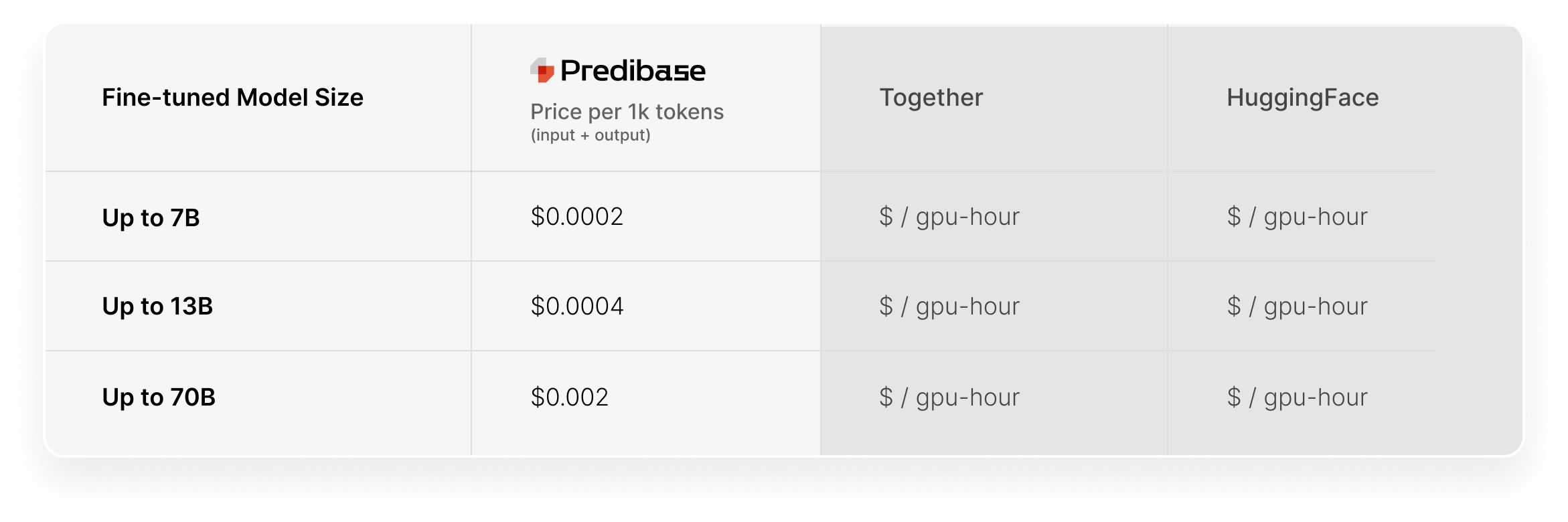

- Industry-Leading Serving Pricing: Serve fine-tuned Llama-2-7b at $0.20 / 1M tokens

Predibase A100 / H100 Compute Clusters

The Predibase AI Cloud offers efficient fine-tuning and serving of LLMs on any GPU including dedicated, highly performant A100 and H100 GPU clusters.

Predibase AI Cloud customers enjoy benefits including:

- High-End GPUs at Your Fingertips: Never have to worry about GPU availability again. Run the largest fine-tuning jobs at the fastest speeds possible with a dedicated cluster of A100s or H100s. Machines are connected with 200 Gbps Ethernet or up to 3.2 Tbps InfiniBand.

- Best-in-Class Training Stack: Leverage premium hardware together with best-in-class Predibase software. The Predibase training service offers efficient and reliable fine-tuning to meet your needs. Powered by Ludwig and DeepSpeed, Predibase allows you to perform complex distributed multi-node training without the hassle.

- Premium Support: Predibase augments the Predibase AI Cloud with a team of ML experts to support you end-to-end on your ML journey. Get access to our exclusive ML training playbook to see how top teams have maximized performance from their training runs. Custom support extends across the entire ML lifecycle including data preparation and generation, fine-tuning parameters, model iteration, and evaluation.

Predibase AI Cloud Pricing

Pricing for Predibase AI Cloud follows a pay-as-you-go model. Predibase AI Cloud is in early access for select customers and will be generally available (GA) later this year.

For comparison, OpenAI charges 8x more for inference on fine-tuned GPT-3.5 models than on the base model. And most other OSS LLM infrastructure companies don’t offer per-token pricing for fine-tuned models at all, forcing you to use an expensive $ / GPU-hour pricing model instead.

Predibase supports state-of-the-art, efficient inference for both pre-trained and fine-tuned models at the same, flat per-token price.
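To illustrate how per-token and per-GPU-hour pricing compare, the sketch below computes a monthly bill under each model and the token volume at which they break even. The $0.20 per 1M tokens figure is the Llama-2-7b serving price quoted above. The $2.00 per GPU-hour rate, the 500M tokens per month workload, and the 30-day month are hypothetical values chosen only for illustration, not quotes from any provider.

```python
HOURS_PER_MONTH = 24 * 30  # assumption: a 30-day month, GPU running continuously


def per_token_cost(tokens: float, price_per_million: float) -> float:
    """Monthly bill when paying a flat price per 1M tokens."""
    return tokens / 1_000_000 * price_per_million


def dedicated_gpu_cost(hourly_rate: float, hours: float = HOURS_PER_MONTH) -> float:
    """Monthly bill for a dedicated GPU billed by the hour, regardless of traffic."""
    return hourly_rate * hours


def breakeven_tokens(hourly_rate: float, price_per_million: float,
                     hours: float = HOURS_PER_MONTH) -> float:
    """Monthly token volume at which both pricing models cost the same."""
    return dedicated_gpu_cost(hourly_rate, hours) / price_per_million * 1_000_000


PRICE_PER_MILLION = 0.20            # from the post: fine-tuned Llama-2-7b
HYPOTHETICAL_GPU_HOURLY_RATE = 2.00  # assumption, for illustration only
MONTHLY_TOKENS = 500_000_000         # assumption: example workload

token_bill = per_token_cost(MONTHLY_TOKENS, PRICE_PER_MILLION)
gpu_bill = dedicated_gpu_cost(HYPOTHETICAL_GPU_HOURLY_RATE)
breakeven = breakeven_tokens(HYPOTHETICAL_GPU_HOURLY_RATE, PRICE_PER_MILLION)

print(f"Per-token bill:     ${token_bill:,.2f}")
print(f"Dedicated GPU bill: ${gpu_bill:,.2f}")
print(f"Break-even volume:  {breakeven / 1e9:.1f}B tokens per month")
```

With these assumed numbers, the example workload costs about $100 per month at the per-token price versus about $1,440 for an always-on GPU, and the two only meet at roughly 7.2 billion tokens per month. Different hourly rates or workloads shift the break-even point, so treat the result as a template rather than a benchmark.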

Fill out the interest form for access to the A100 Cluster here, check out the full details on pricing here, or reach out to support@predibase.com if you have other questions or are interested in this pricing model.

Getting Started with Predibase Today

Whether you’re interested in fine-tuning and serving LLMs in your own cloud VPC or our managed infrastructure, you can begin training models on the Predibase platform for free today.

- Sign up for your free trial of Predibase to fine-tune and serve Llama-2-13B for free

- Join our upcoming webinar LoRA Exchange: Serve 100s of fine-tuned LLMs on a single GPU to learn more about our state-of-the-art LLM infrastructure