Alibaba has officially launched Qwen 3, its most ambitious and versatile open-source LLM to date. The lineup includes eight models, from a lean 0.6B-parameter version ideal for edge devices to a formidable 235B-parameter Mixture of Experts built for serious scale. What sets Qwen 3 apart isn’t just its range. It’s also the hybrid reasoning modes (“think fast” or “think deep”), support for 119 languages, and top-tier performance that rivals the best frontier models in math, coding, and general intelligence.

If you’re excited to start using Qwen 3, but have concerns about data privacy or don’t want to wrestle with building scalable infra, then we have you covered. The complete Qwen 3 model family is now available for high-speed serving and fine-tuning on private serverless deployments in the Predibase cloud or your VPC on AWS, GCP, and Azure—no need to share sensitive data. We also offer shared serverless endpoints for teams that just want to experiment with Qwen 3.

Serve and fine-tune Qwen 3 with Predibase:

- Start a Predibase free trial to get $25 in credits to experiment with Qwen 3 on shared endpoints (not for production use cases).

- Contact our team to set up a private, production-grade deployment of Qwen 3 on high-end GPUs, available in your cloud or ours.

Qwen 3: Flexible, Fully-Open, and Firmly Placed Atop the Charts

The Qwen 3 model family is a complete refresh of Alibaba’s open-weight line-up of Qwen models, designed to push the boundaries of performance and scalability. It's offered in 8 different sizes to serve your specific needs:

- Dense Models: 0.6B, 1.7B, 4B, 8B, 14B, 32B

- Mixture of Experts (MoE) Models: 30B-A3B, 235B-A22B

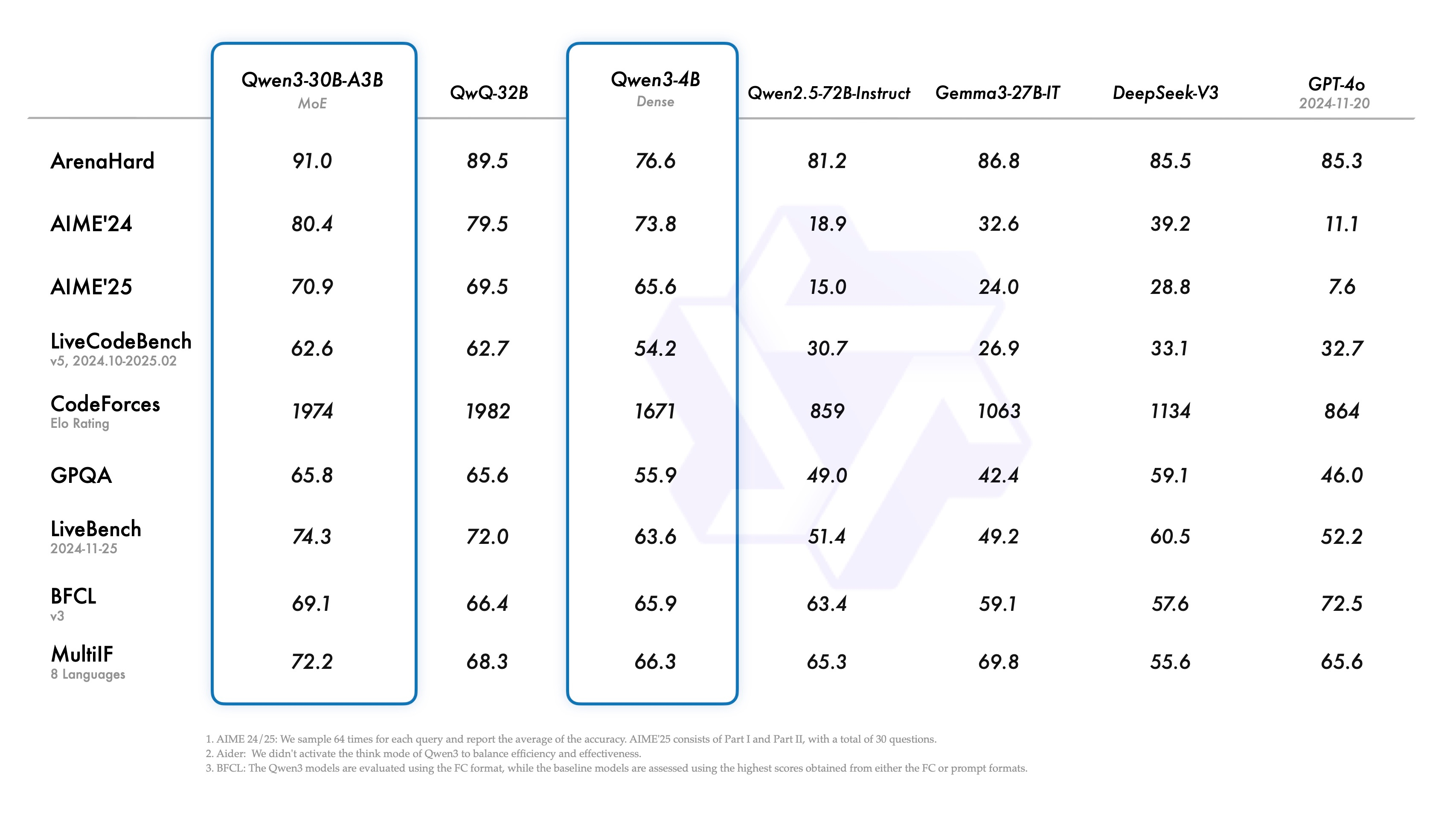

Qwen 3 builds on improved training techniques to deliver performance that rivals much larger models. Impressively, the compact MoE model, Qwen3-30B-A3B, outperforms QwQ-32B with 1/10th as many active parameters. Even the lightweight Qwen3-4B punches well above its weight, delivering comparable performance to its much larger predecessor, Qwen2.5-72B-Instruct.
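To make the "1/10th as many active parameters" comparison concrete, here is a small arithmetic sketch. It reads the nominal parameter counts off the model names (for example, 30B total and 3B active for 30B-A3B) and assumes QwQ-32B is a dense model, so all of its roughly 32B parameters are active for every token. Exact counts differ slightly from these rounded figures.

```python
# Nominal parameter counts in billions, taken from the model names:
# "30B-A3B" means 30B total parameters with 3B active per token.
MODELS = {
    "Qwen3-30B-A3B": {"total_b": 30, "active_b": 3},
    "Qwen3-235B-A22B": {"total_b": 235, "active_b": 22},
}
QWQ_32B_ACTIVE_B = 32  # assumption: QwQ-32B is dense, so every parameter is active


def active_fraction(total_b: float, active_b: float) -> float:
    """Share of a model's parameters that are used for each token."""
    return active_b / total_b


for name, p in MODELS.items():
    share = active_fraction(p["total_b"], p["active_b"])
    print(f"{name}: {share:.1%} of parameters active per token")

# Active parameters of the compact MoE model relative to QwQ-32B
ratio_vs_qwq = MODELS["Qwen3-30B-A3B"]["active_b"] / QWQ_32B_ACTIVE_B
print(f"Qwen3-30B-A3B uses {ratio_vs_qwq:.2f}x the active parameters of QwQ-32B")
```

Both MoE models activate roughly a tenth of their weights per token, which is where the inference savings come from.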

Key Highlights of Qwen 3:

- Hybrid Thinking Modes enable users to toggle between fast responses and deep reasoning via prompt controls. Need chain-of-thought reasoning capabilities to tackle a complex logic problem? Switch to thinking mode. Want speed? Swap back to non-thinking mode. With this approach, you control the cost and quality via prompting.

- Multilingual Support spanning 119 languages, including English, French, German, Chinese, and Japanese. This unlocks new international use cases right out of the box, such as translation and global customer support.

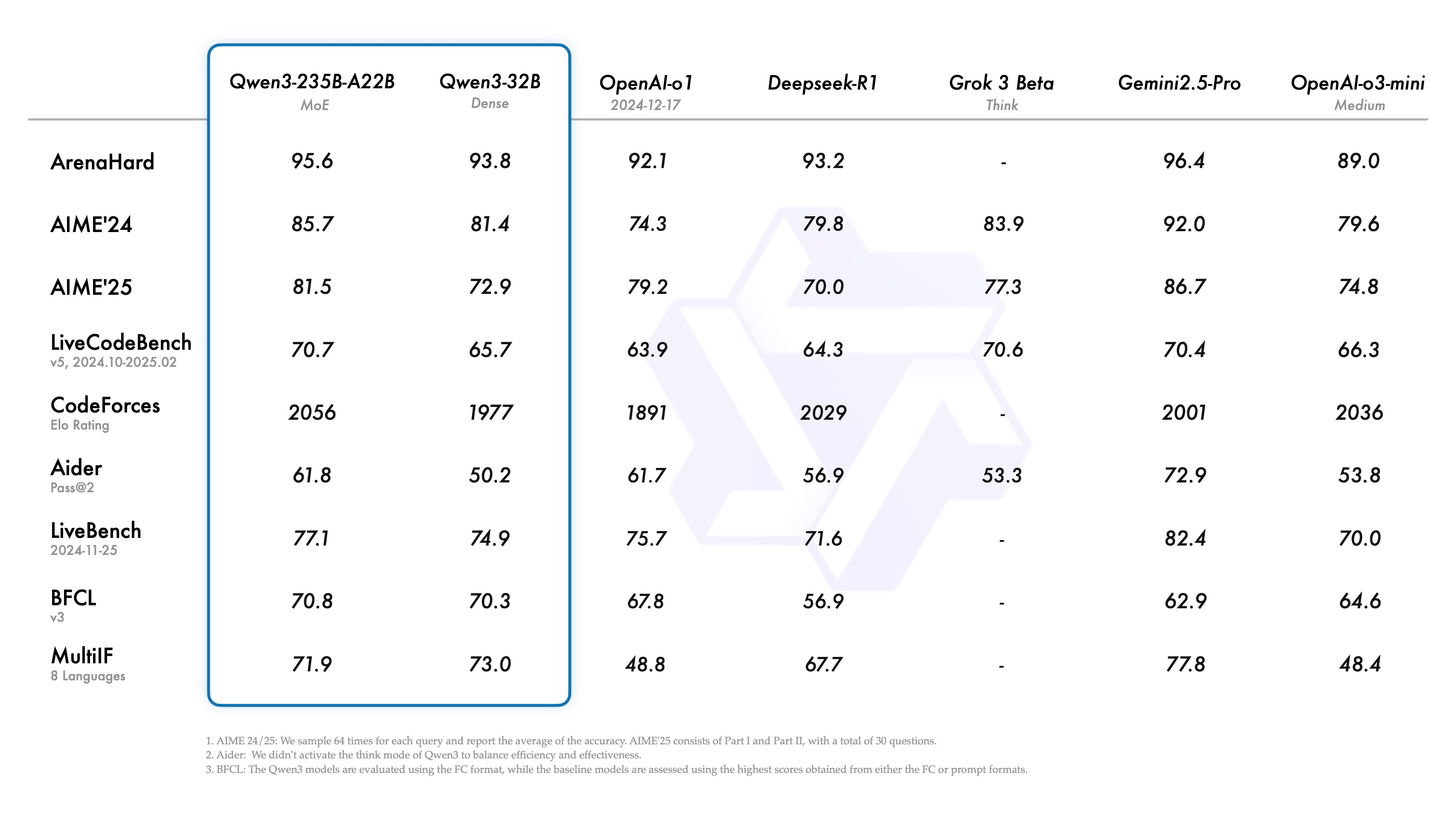

- Advanced Coding & Math Capabilities that rival top-tier models like DeepSeek-R1, GPT-4o, OpenAI o1, Grok-3, and Gemini-2.5-Pro in Alibaba’s benchmarks.

- Efficient MoE Architecture activates only a fraction of parameters during inference (e.g., 22B active out of 235B total), delivering state-of-the-art performance with reduced resource cost.

- Expanded Pretraining Dataset—2x the number of tokens used to train Qwen2.5—and multi-stage post-training ensure robust reasoning, instruction following, and long-context performance (up to 131k tokens).

- Tuned for Advanced Agentic Capabilities, Qwen 3 excels at tool use (MCP) and executing complex multi-step tasks.
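The hybrid thinking toggle from the highlights above can be sketched in a few lines. This is a minimal example, assuming the `/think` and `/no_think` soft switches and the `<think>...</think>` reasoning tags described in the Qwen 3 model cards. The helper names and the sample output are hypothetical, and your serving stack may handle the reasoning tags differently.

```python
import re

# Qwen 3 wraps its chain-of-thought in <think>...</think> when thinking is on
THINK_BLOCK = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def with_thinking(user_prompt: str, think: bool) -> str:
    """Append the soft switch that turns thinking mode on or off for this turn."""
    return f"{user_prompt} {'/think' if think else '/no_think'}"


def split_reasoning(generated_text: str) -> tuple[str, str]:
    """Separate the reasoning block from the final answer."""
    match = THINK_BLOCK.search(generated_text)
    if match is None:
        # Non-thinking mode: the whole output is the answer
        return "", generated_text.strip()
    reasoning = match.group(1).strip()
    answer = generated_text[match.end():].strip()
    return reasoning, answer


# Hypothetical model output, for illustration only
sample = "<think>17 is only divisible by 1 and 17.</think>\nYes, 17 is prime."
reasoning, answer = split_reasoning(sample)

print(with_thinking("Is 17 prime?", think=True))
print(answer)
```

Because thinking mode generates extra reasoning tokens, switching it per request is one way to trade latency and cost against answer quality.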

How to Serve Qwen 3 in Your VPC or Predibase Cloud

Predibase makes it simple to securely deploy all Qwen 3 variants in your cloud with our Virtual Private Cloud (VPC) offering on AWS, GCP, and Azure. Alternatively, you can leverage private deployments on our SaaS infrastructure to serve these models with high-speed inference, while still ensuring robust data security.

In this guide, we’ll show you how to deploy and get started fine-tuning Qwen 3 in just a few lines of code.

Why You Should Colocate LLM Training and Serving

Even with the best pre-trained LLMs, you’ll want an inference stack that supports continuous training so you can optimize your models once in production. Combining your training and serving infrastructure offers the following benefits:

- Continual Improvement: Your models shouldn’t stay stagnant. A unified infrastructure enables real-time updates and fine-tuning as you gather more production data and model feedback.

- Cost Efficiency: Shared GPU resources reduce operational overhead. Efficiently allocate and adjust GPU resources across training and serving jobs as needs fluctuate.

- Faster Iteration: Minimize latency and simplify model deployments. Move from model customization to production serving in just a few clicks or lines of code.

Predibase seamlessly handles both training and inference on a unified platform, whether deployed within your VPC or via our private SaaS offering.

Hardware Requirements for the Qwen 3 Series

The Qwen 3 family comes in a wide range of sizes, with the larger variants requiring substantial compute power. Here’s an overview of the GPU requirements to serve the different models:

| Model Name | Optimal GPU Configuration | Minimum GPU Configuration |
|---|---|---|
| Qwen3-8B | 1x H100 / 1x L40S | 1x L40S (with FP8 quantization) |
| Qwen3-14B | 1x H100 | 1x L40S (with FP8 quantization) |
| Qwen3-32B | 1x H100 | Not recommended on smaller GPUs |
| Qwen3-30B-A3B | 1x H100 | Not recommended on smaller GPUs |
| Qwen3-235B-A22B | 4x H100 | Not recommended on smaller GPUs |
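A rough way to sanity-check GPU sizing is to estimate the memory needed for the weights alone. The sketch below assumes 2 bytes per parameter for BF16 and 1 byte for FP8, 80 GB per H100 and 48 GB per L40S, and it deliberately ignores the KV cache, activations, and runtime overhead, so real requirements are higher. Note that MoE models must hold all of their parameters in memory, not just the active ones. The function names are illustrative and not part of any Predibase API.

```python
# Assumed storage cost per parameter for each weight format
BYTES_PER_PARAM = {"bf16": 2.0, "fp8": 1.0}


def weight_memory_gb(params_billion: float, dtype: str = "bf16") -> float:
    """Approximate memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return params_billion * BYTES_PER_PARAM[dtype]


def fits(params_billion: float, dtype: str, gpu_memory_gb: float, num_gpus: int = 1) -> bool:
    """True if the weights fit in combined GPU memory (no KV cache or activations)."""
    return weight_memory_gb(params_billion, dtype) <= gpu_memory_gb * num_gpus


H100_GB, L40S_GB = 80, 48  # assumed per-GPU memory

print(weight_memory_gb(32, "bf16"))   # Qwen3-32B weights in BF16
print(fits(32, "bf16", H100_GB))      # single H100
print(fits(32, "bf16", L40S_GB))      # single L40S
print(fits(14, "fp8", L40S_GB))       # Qwen3-14B with FP8 on a single L40S
```

Treat the result as a lower bound: a configuration that fails this check will not work, while one that passes still needs headroom for the context length and batch size you plan to serve.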

GPU Availability Considerations

While high-end GPUs, such as H100s, are ideal, they are in short supply among cloud providers. If securing GPUs from your cloud provider is challenging, Predibase’s fully managed SaaS deployments offer a reliable alternative with pre-allocated infrastructure and competitive GPU pricing.

Tutorial: Deploying Qwen 3 on Predibase

For production workloads with throughput/latency SLAs, Predibase customers can get started running any of the Qwen 3 models in one of two ways:

Option 1: Deploy Qwen 3 in Your VPC

If data privacy, security, or compliance is a top concern, Predibase makes it easy to deploy Qwen 3 with complete control over your data, model weights, and infrastructure through private cloud deployments.

For example, if you're a Predibase VPC customer looking to deploy Qwen3-32B in your cloud environment, just run these few lines of code:

# Install Predibase
!pip install predibase

# Import and set up Predibase
from predibase import Predibase, DeploymentConfig

pb = Predibase(api_token="pb_your_token")  # Grab token from Home Page

# Create Qwen 3 deployment
deployment = pb.deployments.create(
    name="qwen3-32b",
    config=DeploymentConfig(
        base_model="Qwen/Qwen3-32B",
        accelerator="h100_80gb_sxm_800",
        min_replicas=1,
        max_replicas=1,
        cooldown_time=1800,
        speculator="disabled",
        max_total_tokens=40000,
        backend="v2"
    )
)

# Prompt it! The name must match the deployment created above
client = pb.deployments.client("qwen3-32b", force_bare_client=True)
print(client.generate("Hello Qwen 32B!", max_new_tokens=128).generated_text)

Sample code for deploying Qwen 3 in your VPC with Predibase
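The deployment config above sets `max_total_tokens=40000`. Assuming this value caps prompt tokens plus generated tokens per request (the usual meaning of this setting in inference servers, but check the Predibase docs for your backend), a small client-side guard can keep requests within budget. The helper below is hypothetical and not part of the Predibase SDK.

```python
MAX_TOTAL_TOKENS = 40_000  # mirrors max_total_tokens in the deployment config


def clamp_max_new_tokens(prompt_tokens: int, requested_new_tokens: int,
                         max_total_tokens: int = MAX_TOTAL_TOKENS) -> int:
    """Largest generation length that keeps prompt + output within the budget."""
    remaining = max_total_tokens - prompt_tokens
    if remaining <= 0:
        raise ValueError(
            f"Prompt uses {prompt_tokens} tokens; the budget is {max_total_tokens}."
        )
    return min(requested_new_tokens, remaining)


# A short prompt leaves the requested length untouched
print(clamp_max_new_tokens(prompt_tokens=1_200, requested_new_tokens=128))
# A very long prompt leaves room for only the remaining tokens
print(clamp_max_new_tokens(prompt_tokens=39_950, requested_new_tokens=128))
```

This matters most in thinking mode, where reasoning tokens count toward the generated length.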

Option 2: Deploy Qwen 3 via Predibase SaaS

For teams that want a secure, high-performance Qwen 3 deployment without worrying about GPU capacity constraints, Predibase offers private, fully-managed serving infra with a broad range of GPU types at competitive prices.

Advantages of Predibase SaaS:

- No GPU procurement required: Predibase has pre-allocated H100 clusters.

- Faster time-to-deployment: Deploy in minutes, not days.

- Fully managed scaling: Auto-adjusts the number of instances (replicas) to handle workload spikes without a pause in performance.

- Security & Compliance: We provide private deployments that meet the stringent security requirements of enterprises. Your deployments are never shared with other customers, and your data is yours forever. Our GPU data centers all reside in the United States and meet the strictest security standards; we are fully SOC 2 Type 2 compliant.

With this option, customers still get a private deployment without worrying about securing scarce compute resources. Setting up a Qwen 3 deployment on our SaaS only requires a few lines of code:

# Install Predibase
!pip install predibase

# Import and set up Predibase
from predibase import Predibase, DeploymentConfig

pb = Predibase(api_token="pb_your_token")  # Grab token from Home Page

# Create Qwen 3 deployment
deployment = pb.deployments.create(
    name="qwen3-32b",
    config=DeploymentConfig(
        base_model="Qwen/Qwen3-32B",
        accelerator="h100_80gb_sxm_800",
        min_replicas=1,
        max_replicas=1,
        cooldown_time=1800,
        speculator="disabled",
        max_total_tokens=40000,
        uses_guaranteed_capacity=True,
        backend="v2"
    )
)

# Prompt it! The name must match the deployment created above
client = pb.deployments.client("qwen3-32b", force_bare_client=True)
print(client.generate("Hello Qwen 32B!", max_new_tokens=128).generated_text)

Sample code for running Qwen 3 on private serverless deployments with Predibase

Experimentation Purposes Only

If you simply want to test out Qwen 3, we also offer shared serverless endpoints of the 4B and 18B variants. Sign up for a Predibase Free Trial to instantly prompt the models. Please note that shared serverless endpoints are not intended for use in production environments. For maximum throughput and guaranteed SLAs, please contact our team to set up a private deployment on H100s.

Customize Qwen 3 for Your Use Case with Fine-tuning

Even with Qwen 3’s impressive out-of-the-box capabilities, fine-tuning lets you tailor the model to your domain, tone, and specific workflows—whether that’s legal reasoning, medical advice, or your brand voice. Customization also enhances performance on proprietary data, such as internal codebases, unlocking deeper accuracy and relevance for your real-world applications.

Predibase makes fine-tuning faster, easier, and more scalable—especially for open-weight models like Qwen 3. We offer a fully managed, end-to-end platform for both supervised and reinforcement fine-tuning (RFT), along with built-in experiment tracking, versioning, and deployment capabilities. With multi-LoRA serving embedded into our inference stack, serving fine-tuned models is fast and efficient at scale.

Getting started with supervised fine-tuning only requires a few lines of code:

from predibase import FinetuningConfig

# Assumes `pb` is the Predibase client created earlier and
# `dataset` is a dataset you have already uploaded to Predibase

# Create an adapter repository
repo = pb.repos.create(name="news-summarizer-model", description="TLDR News Summarizer Experiments", exists_ok=True)

# Start a fine-tuning job; blocks until training is finished
adapter = pb.adapters.create(
    config=FinetuningConfig(
        base_model="qwen3-30b-a3b"
    ),
    dataset=dataset,  # Also accepts the dataset name as a string
    repo=repo,
    description="initial model with defaults"
)

Sample code for customizing Qwen 3 with supervised fine-tuning
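The fine-tuning call above expects a `dataset`, which the sample does not show. Here is a minimal sketch of preparing one locally as JSONL, assuming the instruction-tuning format uses `prompt` and `completion` columns. The example rows, file name, and helper function are hypothetical, so confirm the expected schema and upload call in the Predibase Docs.

```python
import json
import tempfile
from pathlib import Path

# Hypothetical training examples; real data would come from your own corpus
examples = [
    {"prompt": "Summarize: Alibaba released Qwen 3 in eight sizes.",
     "completion": "Alibaba launched eight Qwen 3 models."},
    {"prompt": "Summarize: Qwen 3 supports 119 languages.",
     "completion": "Qwen 3 is broadly multilingual."},
]


def write_jsonl(rows: list[dict], path: Path) -> Path:
    """Write one JSON object per line, validating the assumed column names."""
    with path.open("w", encoding="utf-8") as f:
        for row in rows:
            if set(row) != {"prompt", "completion"}:
                raise ValueError(f"Unexpected columns: {sorted(row)}")
            f.write(json.dumps(row, ensure_ascii=False) + "\n")
    return path


dataset_path = write_jsonl(examples, Path(tempfile.gettempdir()) / "tldr_news.jsonl")
print(f"Wrote {len(examples)} examples to {dataset_path}")
```

Validating column names before upload catches schema mistakes locally instead of after a training job has been queued.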

Additionally, Predibase is the only platform to offer a fully managed solution for reinforcement fine-tuning. Here's example code for kicking off an RFT training job with Predibase:

from predibase import GRPOConfig, RewardFunctionsConfig

# format_reward_func and equation_reward_func are reward functions you define yourself
adapter = pb.adapters.create(
    config=GRPOConfig(
        base_model="qwen3-30b-a3b",
        reward_fns=RewardFunctionsConfig(
            functions={
                "format": format_reward_func,
                "answer": equation_reward_func,
            },
        )
    ),
    dataset="my_code_repository",
    repo=repo,
    description="..."
)

Sample code for training Qwen 3 with reinforcement fine-tuning

Check out the Predibase Docs for detailed use case examples and tutorials for fine-tuning open-source LLMs.

Get started with Qwen 3 today

Deploying Qwen 3 doesn’t have to be a headache. Whether you choose to deploy in your VPC with Predibase or opt for a fully managed private deployment, we’ve got you covered.

✅ Deploy in Your VPC: Ensure full control over infrastructure with Predibase’s private cloud offering.

✅ Leverage Predibase SaaS: Skip the hassle of GPU procurement and let us manage scaling.

✅ Secure & Private Deployments: Both VPC and SaaS ensure enterprise-grade security and compliance.

Get in touch to discuss your Qwen 3 deployment or sign up for a free trial to get $25 of credit for serving and fine-tuning!

FAQ: Frequently Asked Questions about Qwen 3

What is Qwen 3?

Qwen 3 is Alibaba Cloud’s latest family of open-source large language models, ranging from 0.6B to 235B parameters and designed for tasks like coding, math, reasoning, and multilingual communication. According to Alibaba’s benchmarks, the models use improved training techniques to outperform their predecessor, Qwen 2.5, with a smaller footprint, and to compete with other popular models like DeepSeek, GPT-4o, and Gemini.

What are key features of Qwen 3?

Trained on twice as many tokens as its predecessor, Qwen 3 provides advanced reasoning capabilities, including a new hybrid “thinking” mode—fast response or step-by-step reasoning controlled via prompting. Qwen 3 also supports 119 languages, unlocking international use cases right out of the box. The model is available in 8 different sizes, ranging from the compact 0.6B, suitable for edge devices, to the powerful 235B Mixture of Experts (MoE) model, optimized for high performance. Qwen 3 is also tuned for agentic workflows with native support for MCP.

Is Qwen 3 open-source?

Yes. All models in the Qwen 3 family, including the dense and Mixture of Experts (MoE) variants, are released under the Apache 2.0 license, making them free to use, modify, and integrate into both research and commercial applications.

How does Qwen 3 perform vs DeepSeek and GPT-4o on benchmarks?

According to Alibaba’s benchmarks, Qwen 3 delivers standout performance across a wide range of tasks. The flagship MoE model, Qwen3-235B-A22B, is competitive with top-tier models like GPT-4o, DeepSeek-R1, and Gemini 2.5 in areas such as coding, math, and general reasoning. Even smaller variants like Qwen3-4B match or exceed the performance of much larger models from the Qwen2.5 series, making Qwen 3 one of the best-performing open-weight model families currently available.

Performance Benchmarks of Qwen 3 vs. OpenAI o1, DeepSeek-R1, Grok 3, and Gemini 2.5

Performance Benchmarks of Qwen 3 vs. Qwen 2.5, Gemma 3, GPT-4o, and DeepSeek-V3

What are the GPU requirements for serving Qwen 3?

Qwen 3 comes in a wide range of sizes with different GPU needs to run them efficiently. The minuscule 0.6B model can run on edge devices, but the more powerful models require high-end H100s. Here’s a quick guide to the GPU requirements for running the various Qwen 3 models:

- Qwen3-8B and Qwen3-14B require a single H100 or L40S

- Qwen3-32B and Qwen3-30B-A3B MoE require a single H100

- Qwen3-235B-A22B MoE requires four H100s

How do I run Qwen 3?

You can deploy the full series of Qwen 3 models in your own cloud (VPC on AWS, GCP, or Azure) or use a fully managed, private SaaS deployment via Predibase. Both options provide enterprise-grade privacy and high-speed, low-latency inference.

Can I serve Qwen 3 in my private cloud (VPC)?

Yes. Predibase enables private cloud deployments of any Qwen 3 model via VPC infrastructure, allowing teams to meet compliance requirements and maintain complete control over their data, GPUs, and model artifacts.

What are some open-source alternatives to Qwen 3?

Notable alternatives include Mistral, Llama 4, DeepSeek, Gemma, and Phi models. However, Qwen 3 stands out for its strong performance on math, coding, and reasoning benchmarks, its support for 119 languages, and its wide range of sizes (0.6B to 235B), including its Mixture of Experts variants.

Can I fine-tune Qwen 3 for my use case?

Absolutely. Fine-tuning Qwen 3 allows you to adapt the model to your specific domain, data, or task. Platforms like Predibase simplify this process, offering a fully managed, end-to-end service for popular LoRA-based training including supervised and reinforcement fine-tuning (RFT). Additionally, Predibase offers built-in experiment tracking and collaboration features as well as low-latency, high-throughput serving in production.

What is Qwen-Agent and how does it work?

The Alibaba team recommends using Qwen-Agent to make the best use of Qwen 3’s agentic capabilities. Qwen-Agent is a function-calling framework built into the Qwen 3 ecosystem. It supports Qwen-style, GPT-style, and LangChain-compatible APIs for building powerful AI assistants that can utilize external tools, such as web browsers, code interpreters, and domain-specific APIs.