Earlier this year, Mistral AI released Mistral 7B, a popular open-source LLM that skyrocketed to the top of the Hugging Face leaderboard thanks to its small size and strong performance against the larger Llama-2-13b. Only a few months later, the same team released Mixtral 8x7B, one of the first successful open-source implementations of the Mixture of Experts (MoE) architecture. MoE is rumored to be the architecture behind GPT-4.

Fine-tuning open-weight language models like Mixtral 8x7B or Mistral 7B can dramatically boost performance for domain-specific tasks. But getting started with fine-tuning—especially on large mixture-of-experts models—can feel daunting.

That’s why we created this step-by-step guide using Ludwig, our open-source declarative ML framework, to help you fine-tune Mistral and Mixtral models with minimal setup.

👉 Get the code on GitHub to start fine-tuning Mistral and Mixtral today—no boilerplate training loops, no headaches.

Mixtral 8x7B is an exciting development: it shows how well a novel open-source model can perform, and it narrows the gap with commercial LLMs. However, if you plan to use Mixtral for your use case, you'll likely want to fine-tune it on task-specific data to improve performance. (We believe the future is fine-tuned; you can read about our research on the topic in our recent post, Specialized AI.)

Developers getting started with fine-tuning often hit roadblocks implementing the optimizations needed to train models quickly and reliably on cost-effective hardware. To help you avoid the dreaded out-of-memory (OOM) error, we’ve made it easy to fine-tune Mixtral 8x7B for free on commodity hardware using Ludwig, a powerful open-source framework for highly optimized model training through a declarative, YAML-based interface. Ludwig provides a number of optimizations out of the box, such as automatic batch size tuning, gradient checkpointing, parameter-efficient fine-tuning (PEFT), and 4-bit quantization (QLoRA), and it has a thriving community with over 10,000 stars on GitHub.
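To make the PEFT idea concrete, here is a minimal pure-Python sketch of LoRA, the adapter type used later in this tutorial. It is illustrative only (not Ludwig's or any library's implementation): LoRA freezes a pretrained weight matrix and trains two small low-rank factors instead. The 4096 x 4096 shape uses Mixtral's hidden size, and the rank of 8 is an assumption chosen for illustration.

```python
# Minimal LoRA sketch in pure Python (illustrative; not Ludwig's or PEFT's code).
# LoRA freezes a pretrained weight W (d_out x d_in) and learns a low-rank update
# B @ A, with B (d_out x r) and A (r x d_in), so only r * (d_in + d_out) values train.


def lora_trainable_params(d_in: int, d_out: int, rank: int) -> int:
    """Number of trainable values in the LoRA factors A and B."""
    return rank * (d_in + d_out)


def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def lora_forward(W, A, B, x, scale=1.0):
    """y = W x + scale * B (A x); W stays frozen, only A and B would be trained."""
    base = matvec(W, x)              # frozen pretrained path
    delta = matvec(B, matvec(A, x))  # low-rank trainable path
    return [b + scale * d for b, d in zip(base, delta)]


# Parameter count for one 4096 x 4096 projection (4096 = Mixtral's hidden size),
# with an assumed rank of 8.
d = 4096
full_params = d * d                                # 16,777,216
lora_params = lora_trainable_params(d, d, rank=8)  # 65,536
print(f"full: {full_params:,}  LoRA: {lora_params:,}  ratio: {lora_params / full_params:.2%}")

# Toy forward pass: B starts at zero, so the adapted layer initially
# reproduces the frozen layer exactly.
W = [[1.0, 2.0, 0.0], [0.0, 1.0, 3.0]]  # d_out=2, d_in=3
A = [[0.1, -0.2, 0.3]]                  # rank 1 x d_in
B = [[0.0], [0.0]]                      # d_out x rank 1, zero-initialized
x = [1.0, 1.0, 1.0]
y = lora_forward(W, A, B, x)
print(y)
```

Zero-initializing `B` is the usual LoRA choice: training starts from exactly the pretrained model, and the adapter learns only the task-specific correction. In practice `scale` is typically `alpha / rank`.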

Let's get started!

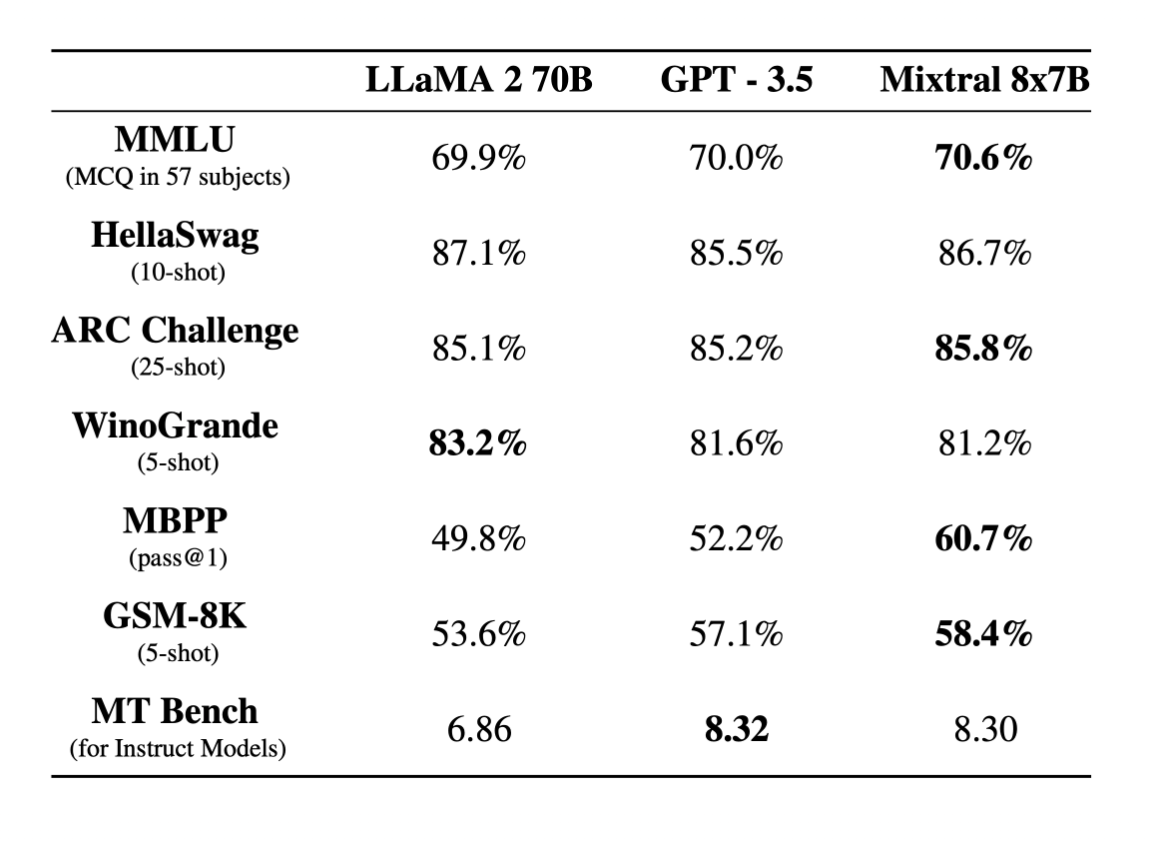

Mixtral 8x7b Performance Benchmarks

As we mentioned earlier, Mixtral 8x7B has shown strong results in comparison to the significantly larger Llama-2-70b against a number of benchmarks:

Mixtral 8x7b base model performance against LLaMa-2-70B. Image source: https://mistral.ai/news/mixtral-of-experts/.

However, we want to see how much further task-specific fine-tuning can push its performance.

Our Fine-tuning Dataset

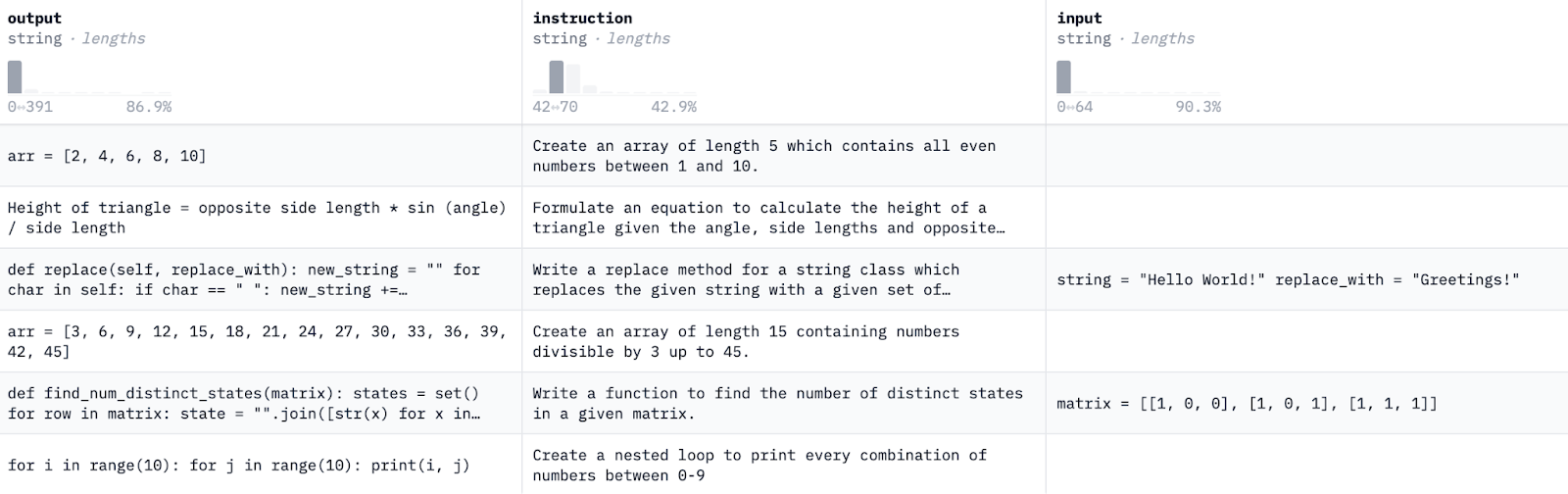

For this fine-tuning example, we will be using CodeAlpaca-20k, a code generation dataset that provides an instruction, a potential input, and an output:

A screenshot of the CodeAlpaca-20k dataset that we used to fine-tune Mixtral 8x7b.
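Before training, each record is wrapped in the prompt template from the Ludwig configuration in the next section, whose `{instruction}` and `{input}` placeholders name dataset columns. The sketch below mimics that substitution with Python's `str.format` on a hypothetical CodeAlpaca-style record. It is not Ludwig's internal code, and it assumes YAML's folded `>-` style joins the wrapped first sentence into one line and keeps each `###` section on its own line.

```python
# Hypothetical illustration of how a prompt template is filled from one record.
# Ludwig performs this step internally; this only mimics the substitution.

# Template text as a folded (`>-`) YAML scalar would yield it: the wrapped sentence
# joined by spaces, one section per line, and no trailing newline (an assumption).
TEMPLATE = (
    "Below is an instruction that describes a task, paired with an input that "
    "provides further context. Write a response that appropriately completes the "
    "request.\n"
    "### Instruction: {instruction}\n"
    "### Input: {input}\n"
    "### Response:"
)


def render_prompt(record: dict) -> str:
    """Substitute a record's columns into the template placeholders."""
    # Many CodeAlpaca examples have an empty input, so default to "".
    return TEMPLATE.format(instruction=record["instruction"], input=record.get("input", ""))


# Hypothetical record with the three CodeAlpaca-20k fields.
example = {
    "instruction": "Write a function that returns the square of a number.",
    "input": "",
    "output": "def square(x):\n    return x * x",
}

prompt = render_prompt(example)
print(prompt)
```

The `output` column is not part of the prompt: it is the target the model learns to generate after `### Response:`.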

How to Fine-tune Mixtral 8x7b

To make fine-tuning a 47B-parameter model feasible, we combined 4-bit quantization, adapter-based fine-tuning, and gradient checkpointing to reduce memory overhead as much as possible. With these optimizations, we were able to fine-tune Mixtral 8x7B on two NVIDIA RTX A5000 GPUs (24 GB each).
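A back-of-the-envelope estimate shows why 4-bit quantization matters here. The sketch below assumes ~47B parameters (the figure above) and 24 GB per RTX A5000, and it counts weights only. Quantization constants, LoRA parameters, optimizer state, and activations all add to the total. Because Mixtral is a mixture of experts, every expert must be resident in memory even though only a subset is used for each token.

```python
# Back-of-the-envelope weight-memory estimate for Mixtral 8x7B.
# Assumptions: ~47B total parameters, 24 GB per RTX A5000, two GPUs.
# Weights only: quantization constants, LoRA parameters, optimizer state,
# and activations are NOT included.

PARAMS = 47e9        # total parameters (all experts stay in memory)
GPU_MEMORY_GB = 24   # memory per A5000, decimal gigabytes
NUM_GPUS = 2


def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Storage needed for the weights, in decimal GB."""
    return num_params * bits_per_param / 8 / 1e9


fp16_gb = weight_memory_gb(PARAMS, 16)    # 94.0 GB
four_bit_gb = weight_memory_gb(PARAMS, 4)  # 23.5 GB
total_gpu_gb = GPU_MEMORY_GB * NUM_GPUS    # 48 GB

for label, gb in [("16-bit", fp16_gb), ("4-bit", four_bit_gb)]:
    print(f"{label}: {gb:.1f} GB of weights, fits in {total_gpu_gb} GB: {gb < total_gpu_gb}")
```

At 16 bits, the weights alone are roughly double the combined memory of both cards. At 4 bits, they fit with headroom left for activations and the small LoRA adapters, and gradient checkpointing shrinks the activation share further.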

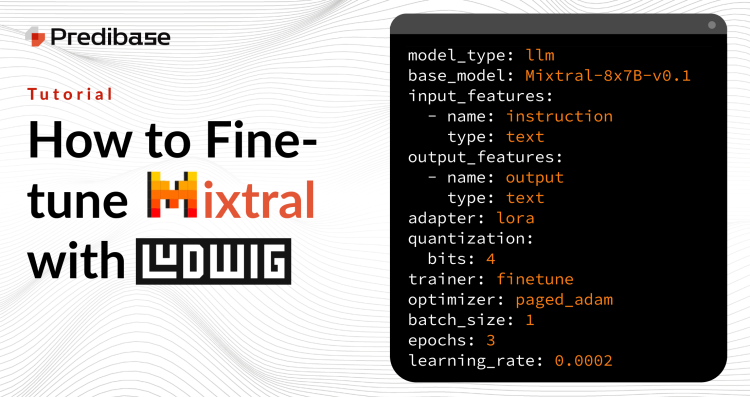

Because Ludwig is a declarative framework, all you need to fine-tune an LLM is a simple configuration. Here’s the one we used for fine-tuning Mixtral:

```yaml
model_type: llm
input_features:
  - name: instruction
    type: text
output_features:
  - name: output
    type: text
preprocessing:
  global_max_sequence_length: 256
prompt:
  template: >-
    Below is an instruction that describes a task, paired with an input that
    provides further context. Write a response that appropriately completes the
    request.

    ### Instruction: {instruction}

    ### Input: {input}

    ### Response:
adapter:
  type: lora
trainer:
  type: finetune
  optimizer:
    type: paged_adam
  epochs: 3
  gradient_accumulation_steps: 16
  enable_gradient_checkpointing: true
backend:
  type: local
base_model: mistralai/Mixtral-8x7B-v0.1
quantization:
  bits: 4
```

Sample Ludwig code for fine-tuning Mixtral 8x7b.
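The `gradient_accumulation_steps: 16` setting lets each optimizer step use gradients from 16 micro-batches while only one micro-batch sits in GPU memory at a time. The toy sketch below (pure Python, a one-parameter model, hypothetical data, micro-batch size, and learning rate; not Ludwig's trainer code) shows that averaging gradients over 16 equal-sized micro-batches produces the same update as one large batch.

```python
# Toy sketch of gradient accumulation (illustrative only; not Ludwig's trainer).
ACCUMULATION_STEPS = 16
MICRO_BATCH_SIZE = 2  # hypothetical; Ludwig can tune batch size automatically

# Hypothetical data for a one-parameter model y_hat = w * x with squared error.
xs = [float(i) for i in range(ACCUMULATION_STEPS * MICRO_BATCH_SIZE)]
ys = [3.0 * x + 1.0 for x in xs]
w = 0.5


def mean_grad(batch_x, batch_y, w):
    """d/dw of mean((w * x - y) ** 2) over the batch."""
    return sum(2 * (w * x - y) * x for x, y in zip(batch_x, batch_y)) / len(batch_x)


# Gradient of one big batch (would need all examples in memory at once).
full_batch_grad = mean_grad(xs, ys, w)

# Same data as 16 micro-batches: scale each micro-batch gradient and accumulate.
accumulated = 0.0
for step in range(ACCUMULATION_STEPS):
    start = step * MICRO_BATCH_SIZE
    bx = xs[start:start + MICRO_BATCH_SIZE]
    by = ys[start:start + MICRO_BATCH_SIZE]
    accumulated += mean_grad(bx, by, w) / ACCUMULATION_STEPS

learning_rate = 1e-4                     # hypothetical
w_new = w - learning_rate * accumulated  # one optimizer step after 16 micro-batches

print(full_batch_grad, accumulated)
```

The equivalence holds exactly for equal-sized micro-batches. The trade-off is time rather than memory: 16 forward and backward passes run before each weight update.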

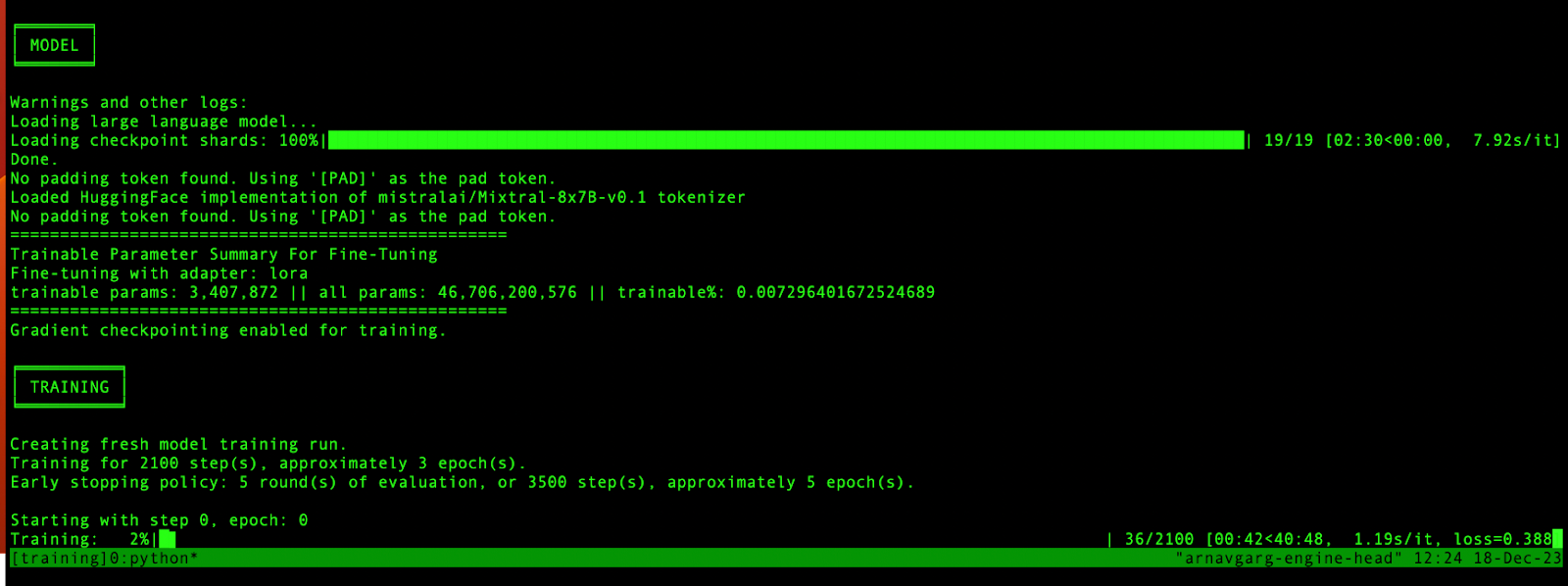

After you kick off your training job, you should see a screen that looks like this:

Screenshot of our Ludwig fine-tuning job for Mixtral 8x7b.

Congratulations, you’re now fine-tuning Mixtral 8x7b!

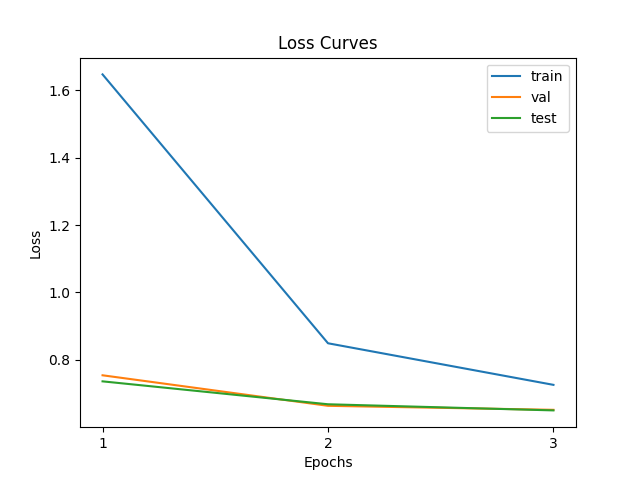

If you’re interested in how the training should go, here are the loss curves from our training run for reference:

Loss curves from our Mixtral training run.

Next Steps: Fine-tune Mixtral 8x7b for Your Use Case

In this short tutorial, we showed you how to fine-tune Mixtral 8x7b easily, reliably, and efficiently on readily available hardware using open-source Ludwig. Try it out and share your results with the Ludwig community on Discord.

If you're interested in fine-tuning and serving LLMs on managed, cost-effective serverless infrastructure within your cloud, check out Predibase. Sign up for our free 2-week trial to train and serve any open-source LLM, including Mixtral, Llama-2, and Zephyr, on hardware configurations ranging from T4s to A100s. For serving fine-tuned models, you can choose between spinning up a dedicated deployment per model and packing many fine-tuned models into a single deployment for massive cost savings without sacrificing throughput or latency. Check out our open-source project LoRAX to learn more about our novel approach to model serving.

If you're looking to push performance even further, consider exploring Reinforcement Fine-Tuning (RFT), a cutting-edge method we recently launched that enables GPT-4-beating accuracy with minimal labeled data.

FAQs

What is Mixtral fine-tuning and how does it work?

Mixtral fine-tuning is the process of customizing the open-weight Mixtral 8x7B model for specific tasks or domains. By using tools like Ludwig, an open-source declarative machine learning framework, developers can fine-tune Mixtral models efficiently without writing extensive custom training code. This allows users to plug in their data, adjust configuration files, and train powerful models suited to their unique needs.

How do I fine-tune Mistral 7B using Ludwig?

To fine-tune Mistral 7B using Ludwig, you simply need a YAML config, your training data, and access to the Ludwig CLI or Python API. Ludwig handles tokenization, model setup, and training behind the scenes. At Predibase, we provide step-by-step guides and infrastructure to accelerate this process. Check out our Mixtral fine-tuning tutorial above for hands-on instructions that apply to Mistral models too.
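As a sketch of how the Mixtral tutorial carries over to Mistral 7B, the snippet below expresses the same configuration as a Python dictionary (Ludwig's `LudwigModel` accepts either a YAML path or a dict) and swaps only `base_model`. The Hugging Face ID `mistralai/Mistral-7B-v0.1` is our choice for illustration. Keeping 4-bit quantization for the much smaller model is optional, and the final training call is left as a comment because it requires Ludwig and a GPU.

```python
import copy
import json

# The Mixtral config from the tutorial, expressed as a Python dict.
mixtral_config = {
    "model_type": "llm",
    "input_features": [{"name": "instruction", "type": "text"}],
    "output_features": [{"name": "output", "type": "text"}],
    "preprocessing": {"global_max_sequence_length": 256},
    "prompt": {
        "template": (
            "Below is an instruction that describes a task, paired with an input that "
            "provides further context. Write a response that appropriately completes the "
            "request.\n"
            "### Instruction: {instruction}\n"
            "### Input: {input}\n"
            "### Response:"
        )
    },
    "adapter": {"type": "lora"},
    "trainer": {
        "type": "finetune",
        "optimizer": {"type": "paged_adam"},
        "epochs": 3,
        "gradient_accumulation_steps": 16,
        "enable_gradient_checkpointing": True,
    },
    "backend": {"type": "local"},
    "base_model": "mistralai/Mixtral-8x7B-v0.1",
    "quantization": {"bits": 4},
}

# Deep-copy so the Mixtral config is left untouched, then swap the base model.
mistral_config = copy.deepcopy(mixtral_config)
mistral_config["base_model"] = "mistralai/Mistral-7B-v0.1"

print(json.dumps(mistral_config, indent=2))

# With Ludwig installed, training would then look roughly like:
#   from ludwig.api import LudwigModel
#   model = LudwigModel(config=mistral_config)
#   model.train(dataset="<path to your data>")
```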

Is there a GitHub repository for Mistral fine-tuning?

Yes! You can find open-source resources for Mistral fine-tuning on GitHub, including Ludwig configurations and sample datasets. Visit our Ludwig GitHub repository to access code, templates, and documentation that make fine-tuning Mistral or Mixtral models faster and more reproducible.

Can I fine-tune Mixtral models without labeled data?

Absolutely. With Predibase’s Reinforcement Fine-Tuning (RFT) platform, you can fine-tune Mixtral models using just a handful of labeled examples, or even unlabeled data paired with reward signals. RFT is ideal for reducing labeling overhead while still achieving state-of-the-art performance. Learn more about how it works in our RFT launch blog.

How does Predibase simplify Mistral and Mixtral model tuning?

Predibase simplifies the entire fine-tuning pipeline through a no-code/low-code UI, open-source Ludwig backend, and automated infrastructure. You can deploy fine-tuned Mistral and Mixtral models quickly, integrate RFT for improved accuracy, and avoid the need for extensive engineering or labeled data. Whether you're using GitHub, Python, or the UI, we provide everything needed to go from dataset to deployment with minimal friction.

Why Fine-Tune Mistral with Predibase?

Predibase’s open-source stack enables domain-specific fine-tuning with minimal labeled data, achieving 20%+ higher accuracy than GPT-4 on custom tasks.