As we close out another transformative year at Predibase, we’re excited to share a summary of the most significant advancements and new capabilities we've introduced in the latter half of this year. This review encapsulates how these developments empower you to harness the full potential of fine-tuning and serving models more efficiently and effectively.

If you'd like to see some of these demoed, check out our Predibase Wrapped webinar recording!

Try Predibase yourself for free with $25 in credit for fine-tuning and serving SLMs!

New Model Support

Llama Models

We recently extended our support across the Llama model series, which now includes:

- Llama 3.2 1B, 3B, and 11B offer robust performance across a variety of tasks and are great for fine-tuning.

- Llama 3.3 70B, designed for more complex, nuanced understanding, pushes the boundaries of what's possible with open-source LLMs.

Upstage's Solar Pro

Integration of Upstage's Solar Pro offers our users a model specifically tuned for high-efficiency operations in enterprise environments. This model stands out for its exceptional understanding of domain-specific contexts, making it ideal for industries requiring precise and reliable AI interpretations. Learn more about Solar Pro.

We added dozens more! Check out our docs for a full list.

Vision Language Models (VLM)

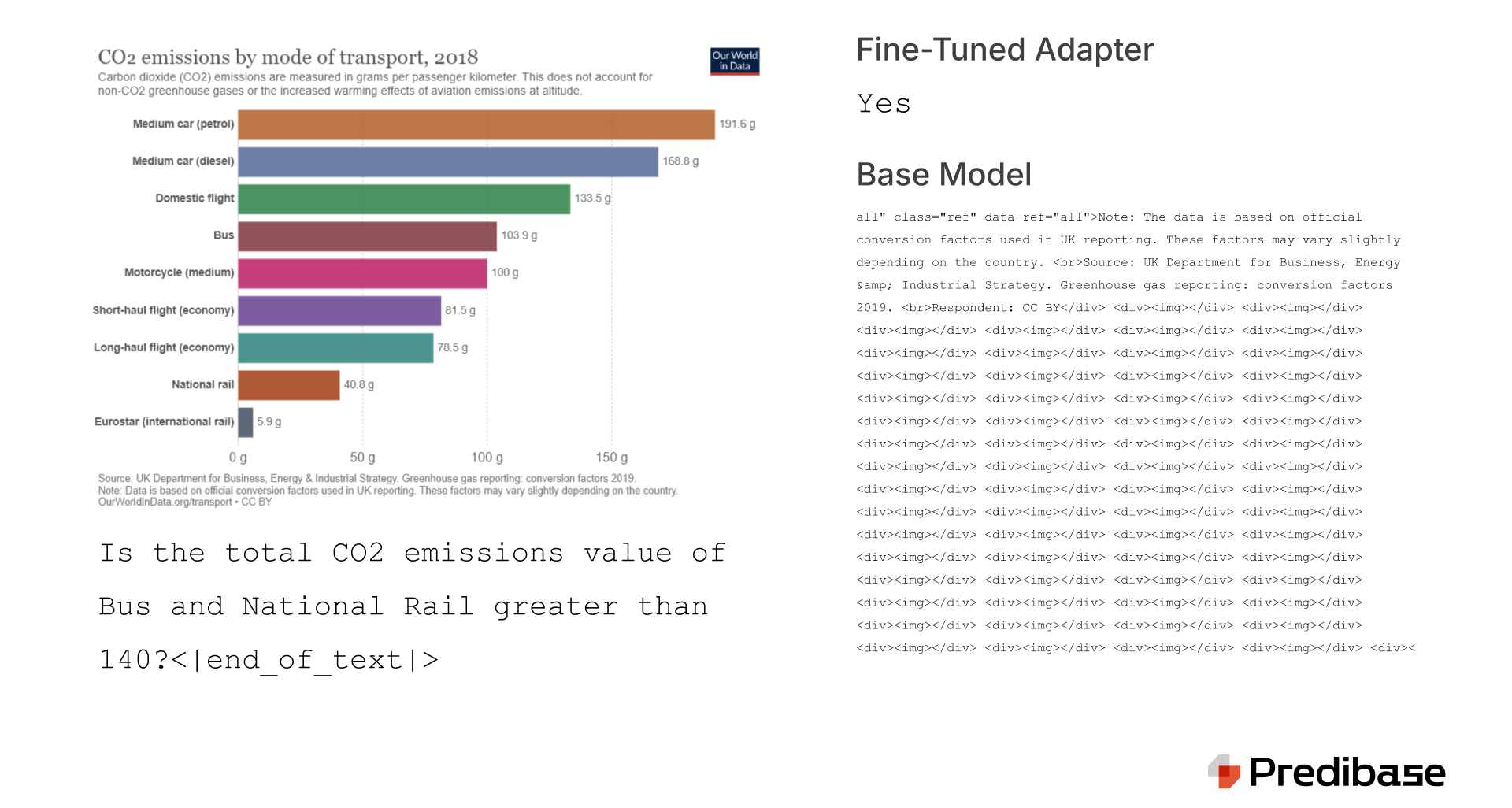

Predibase has significantly enhanced its support for Vision-Language Models (VLMs) this year, which combine image and text processing to perform tasks like image captioning, visual question answering, and document understanding. VLMs are known for their robust zero-shot capabilities, allowing them to adapt to new tasks with no additional training. However, the true utility of VLMs is unlocked through fine-tuning, which tailors models to perform specific tasks with greater accuracy and efficiency.

The fine-tuning process for VLMs, while beneficial, presents unique challenges including complex tooling integration and unreliable GPU performance, which can hinder the efficiency of model training. To combat these issues, Predibase has streamlined the deployment process. Our platform automates the data preprocessing to ensure inputs are correctly formatted and utilizes our LoRAX system to manage the complex serving requirements of diverse VLM architectures, simplifying what has traditionally been a cumbersome process.

By implementing a declarative approach to model configuration, Predibase allows users to easily specify and adjust fine-tuning parameters, removing the need for intricate coding. This approach not only makes VLMs more accessible but also significantly reduces the cost and complexity of deploying highly effective models tailored to specific needs.
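To make the idea of a declarative configuration concrete, here is a minimal sketch in Python. It is not the Predibase SDK: the `FineTuneSpec` class, its field names, its defaults, and the model name are all hypothetical. It only shows the general pattern of stating what you want, relying on defaults for the rest, and validating the result before any training starts.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class FineTuneSpec:
    """Hypothetical declarative spec; field names are illustrative only."""
    base_model: str
    epochs: int = 3
    learning_rate: float = 2e-4
    lora_rank: int = 16

    def __post_init__(self) -> None:
        # Reject obviously invalid values up front instead of mid-training.
        if self.epochs < 1:
            raise ValueError("epochs must be at least 1")
        if self.learning_rate <= 0:
            raise ValueError("learning_rate must be positive")
        if self.lora_rank < 1:
            raise ValueError("lora_rank must be at least 1")


def spec_from_dict(overrides: dict) -> FineTuneSpec:
    """Build a spec from user overrides, rejecting unknown keys."""
    known = {f.name for f in fields(FineTuneSpec)}
    unknown = set(overrides) - known
    if unknown:
        raise ValueError(f"unknown settings: {sorted(unknown)}")
    return FineTuneSpec(**overrides)


# The user states only what differs from the defaults.
spec = spec_from_dict({"base_model": "example-org/example-vlm", "epochs": 5})
```

The point of the pattern is that a typo in a setting name fails immediately with a readable message, rather than being silently ignored.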

Fine-tuning significantly improved the model's response.

Embedding Models

In 2024, Predibase added the ability to serve embedding models, which are critical for applications such as RAG, semantic search, text classification, and sentiment analysis. Our updates include support for customizable embedding dimensions, allowing engineers to adjust the size of embeddings to match their specific task requirements. This helps optimize the model's performance across AI tasks by aligning the embeddings more closely with the characteristics of the data they are meant to represent.

Predibase supports various embedding models, including:

- BERT-based models (e.g., WhereIsAI/UAE-Large-V1)

- DistilBERT and DistilBERT-based models

- MRL Qwen-based models (e.g., dunzhang/stella_en_1.5B_v5)
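As an illustration of customizable embedding dimensions, the sketch below assumes a Matryoshka-style (MRL) model, where the leading components of a vector remain a usable embedding on their own. Under that assumption, shrinking an embedding means keeping the first `dim` values and rescaling to unit length. The function names are hypothetical, and this is client-side post-processing, not a description of how Predibase implements the feature.

```python
import math
from typing import List, Sequence


def truncate_embedding(vector: Sequence[float], dim: int) -> List[float]:
    """Keep the first `dim` components and rescale to unit length."""
    if not 1 <= dim <= len(vector):
        raise ValueError("dim must be between 1 and len(vector)")
    head = list(vector[:dim])
    norm = math.sqrt(sum(x * x for x in head))
    if norm == 0.0:
        return head  # an all-zero vector cannot be rescaled
    return [x / norm for x in head]


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two equal-length, non-zero vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return sum(x * y for x, y in zip(a, b)) / (norm_a * norm_b)


# Toy 3-dimensional "embedding" reduced to 2 dimensions.
small = truncate_embedding([3.0, 4.0, 12.0], dim=2)
```

Smaller vectors cut storage and search cost. How much retrieval quality is lost depends on the model and the task, so it is worth measuring on your own data.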

Customer use case: We operate approximately five replicas of a BERT-based classification system for a prominent U.S.-based consumer finance application, handling over 8.5 million requests daily by classifying each transaction in their production environment. Predibase's specialized serving stack enables us to deliver this model inference service at exceptionally low costs and high throughput.
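For a sense of scale, the figures above can be converted into an average request rate. This is back-of-the-envelope arithmetic only: it assumes exactly five replicas and perfectly even traffic over 24 hours, neither of which the use case states, so real peak load per replica will be higher.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400


def average_rps(requests_per_day: float, replicas: int = 1) -> float:
    """Average requests per second handled by each replica."""
    if replicas < 1:
        raise ValueError("replicas must be at least 1")
    return requests_per_day / SECONDS_PER_DAY / replicas


total_rps = average_rps(8_500_000)                    # roughly 98 requests/s overall
per_replica_rps = average_rps(8_500_000, replicas=5)  # roughly 20 requests/s each
```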

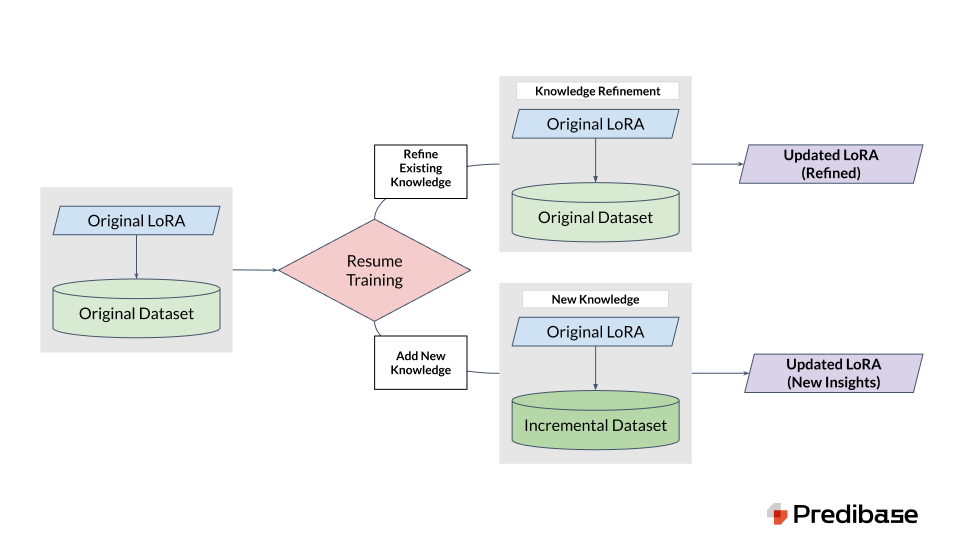

Continued Fine-Tuning

We recently added support for continued training, allowing teams to retrain models at a cadence that matches the pace of data change in their production environment. This rapid iteration cycle is vital for integrating new insights and adapting to changes in user behavior, and it helps keep models accurate and relevant.

However, continuous retraining can be resource-intensive, especially as data volumes grow. To address this, we've implemented incremental fine-tuning, which updates models with new data without the need for full retraining. This method reduces both the time and cost of model updates. We've also incorporated rehearsal learning techniques to combat challenges like catastrophic forgetting and data misalignment. This approach involves blending new data with a selection of historical data during training updates, ensuring the model remains robust and its learning stable.
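The rehearsal idea described above can be sketched in a few lines of Python. This is an illustrative assumption about how such a mix might be built, not Predibase's implementation: the function name, the `rehearsal_fraction` parameter, and uniform random sampling of historical data are all choices made for the example.

```python
import random
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def build_rehearsal_mix(
    new_examples: Sequence[T],
    historical_examples: Sequence[T],
    rehearsal_fraction: float = 0.2,
    seed: int = 0,
) -> List[T]:
    """Blend all new examples with a random sample of historical ones.

    `rehearsal_fraction` is the share of the final mix that should come
    from historical data (capped by how much historical data exists).
    """
    if not 0.0 <= rehearsal_fraction < 1.0:
        raise ValueError("rehearsal_fraction must be in [0, 1)")
    rng = random.Random(seed)
    n_replay = round(
        len(new_examples) * rehearsal_fraction / (1.0 - rehearsal_fraction)
    )
    n_replay = min(n_replay, len(historical_examples))
    replay = rng.sample(list(historical_examples), n_replay)
    mixed = list(new_examples) + replay
    rng.shuffle(mixed)  # avoid training on all new data, then all old data
    return mixed


new_data = [f"new-{i}" for i in range(80)]
old_data = [f"old-{i}" for i in range(100)]
mix = build_rehearsal_mix(new_data, old_data, rehearsal_fraction=0.2, seed=42)
```

With 80 new examples and a 20% rehearsal fraction, the mix contains 100 examples, 20 of them replayed from history. A fixed seed keeps the selection reproducible between runs.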

These enhancements are designed to make the process of model training and updating as efficient and effective as possible, helping our customers keep their models at the cutting edge without the overhead typically associated with continuous retraining. With these new features, Predibase is making it easier than ever for AI teams to maintain the accuracy and relevancy of their models in fast-evolving operational environments.

Refine existing knowledge or incorporate new knowledge through continued training.

Request Logging for Enhanced Production Insights

Predibase has upgraded its platform with a new request logging feature, providing users with detailed insights into their models' interactions by capturing comprehensive logs of prompts and responses. This tool is essential for monitoring performance, refining model behavior, and maintaining transparency through a clear audit trail.

Ongoing Improvements to Model Speed and Quality: Request logs let users quickly identify patterns and assess model accuracy. Teams can find the requests where the model made mistakes and then retrain it on corrected responses.

Informed Decision-Making: Detailed request logs help teams make better decisions regarding model updates and iterations. This data-driven approach supports strategic planning and enhances operational efficiency.

This enhancement underscores Predibase’s commitment to delivering transparent, high-quality AI solutions that empower users to improve the reliability and effectiveness of their applications.
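The loop of finding mistakes in the logs and retraining on corrected responses might look like the sketch below. The log record shape (`request_id`, `prompt`, `response`) and the `prompt`/`completion` output columns are assumptions made for illustration; the actual export format of the request logs is not described here.

```python
import json
from typing import Dict, Iterable, List


def build_retraining_rows(
    logs: Iterable[Dict[str, str]],
    corrections: Dict[str, str],
) -> List[Dict[str, str]]:
    """Pair each logged prompt with its human-corrected response.

    `corrections` maps a request_id to the corrected text. Logged requests
    without a correction are skipped.
    """
    rows = []
    for record in logs:
        fixed = corrections.get(record["request_id"])
        if fixed is None:
            continue
        rows.append({"prompt": record["prompt"], "completion": fixed})
    return rows


def to_jsonl(rows: Iterable[Dict[str, str]]) -> str:
    """Serialize rows as JSON Lines, one training example per line."""
    return "\n".join(json.dumps(row) for row in rows)


# Hypothetical logged requests and one reviewer correction.
logged = [
    {"request_id": "a1", "prompt": "Classify: coffee shop", "response": "Travel"},
    {"request_id": "a2", "prompt": "Classify: airline", "response": "Travel"},
]
rows = build_retraining_rows(logged, corrections={"a1": "Dining"})
jsonl = to_jsonl(rows)
```

Only the corrected example ends up in the retraining file. The second request was already right and has no entry in `corrections`, so it is skipped.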

Fine-Tuning for Function Calling

We’re excited to announce support for fine-tuning LLMs for function calling, a feature that enables models to produce structured, schema-compliant outputs like JSON or API calls instead of freeform text. Function calling, a core capability of Agentic AI systems, allows LLMs to extract relevant parameters from user inputs and format them into predefined structures, making them immediately usable by downstream systems like APIs or databases. This functionality bridges the gap between user queries and machine-executable actions, ensuring smooth automation and integration.

Fine-tuning for function calling enhances these capabilities by training the model to better adhere to specific schemas, extract parameters with higher accuracy, and tailor outputs to proprietary workflows or domain-specific APIs. This is critical for enabling Agentic AI to operate reliably in real-world scenarios, improving robustness by handling ambiguous inputs or missing information gracefully, such as by generating error messages or fallback responses. These improvements minimize disruptions and ensure consistent performance across complex workflows.

By fine-tuning for function calling, organizations can simplify workflows and reduce post-processing, making integration with existing systems faster and more efficient. Whether automating complex processes or powering domain-specific APIs, this feature advances the promise of Agentic AI—AI that not only understands user intent but also takes structured, actionable steps to execute it. This allows businesses to streamline operations and unlock new opportunities for AI-driven solutions.
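To show what "schema-compliant" means in practice, here is a minimal sketch of the check a downstream system might run on a model's output before executing anything. The `get_weather` tool, its parameters, and the `{"name": ..., "arguments": ...}` output shape are hypothetical examples, and the validator is deliberately simple (a production system would more likely use a full JSON Schema validator).

```python
import json
from typing import Any, Dict

# Hypothetical tool definition: parameter names mapped to expected types.
WEATHER_TOOL = {
    "name": "get_weather",
    "required": {"city": str},
    "optional": {"unit": str},
}


def parse_function_call(raw_output: str, tool: Dict[str, Any]) -> Dict[str, Any]:
    """Validate model output against a tool definition.

    Returns {"ok": True, ...} for a usable call, or {"ok": False, "error": ...}
    so the caller can fall back gracefully instead of crashing.
    """
    try:
        call = json.loads(raw_output)
    except json.JSONDecodeError:
        return {"ok": False, "error": "output is not valid JSON"}
    if not isinstance(call, dict) or call.get("name") != tool["name"]:
        return {"ok": False, "error": "unexpected function name"}
    args = call.get("arguments", {})
    if not isinstance(args, dict):
        return {"ok": False, "error": "arguments must be an object"}
    allowed = {**tool["required"], **tool["optional"]}
    for key in tool["required"]:
        if key not in args:
            return {"ok": False, "error": f"missing required argument: {key}"}
    for key, value in args.items():
        if key not in allowed:
            return {"ok": False, "error": f"unknown argument: {key}"}
        if not isinstance(value, allowed[key]):
            return {"ok": False, "error": f"wrong type for argument: {key}"}
    return {"ok": True, "name": call["name"], "arguments": args}


good = parse_function_call(
    '{"name": "get_weather", "arguments": {"city": "Paris"}}', WEATHER_TOOL
)
bad = parse_function_call('{"name": "get_weather", "arguments": {}}', WEATHER_TOOL)
```

A model fine-tuned for function calling should fail checks like these far less often, which is where the reduction in post-processing comes from.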

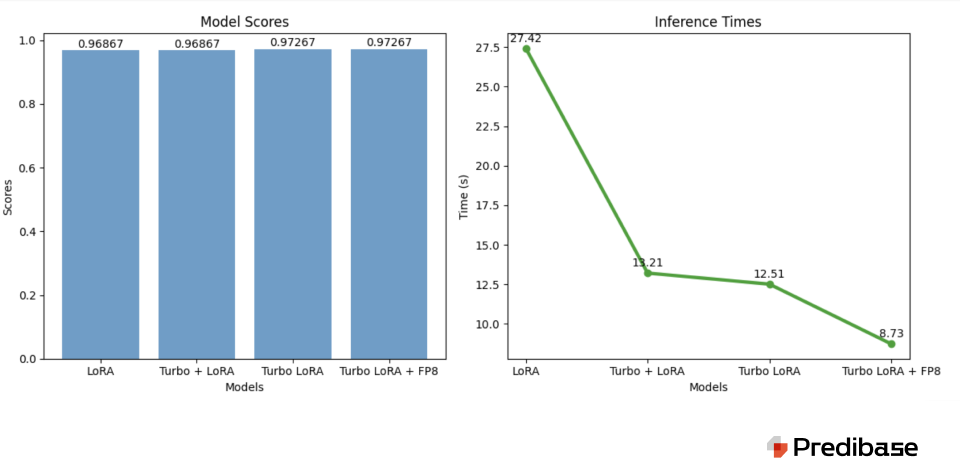

Accelerate existing LoRAs with Turbo

In our continuous effort to enhance model performance, Predibase now offers the ability to accelerate your existing LoRA models using our Turbo feature. You can upgrade LoRAs trained on our platform or elsewhere and significantly boost throughput without impacting model accuracy. Additionally, you can fine-tune with Turbo LoRA directly on Predibase for the highest quality, with throughput improvements of about 4x when paired with FP8 quantization.

Turbo Training from Your Own LoRA:

- Train a Custom Speculator: Start by training a custom speculator which acts to enhance your existing LoRA's efficiency.

- 2x Speed Enhancements: By converting your standard LoRA to a Turbo, you effectively increase its throughput while maintaining the exact same output.

- Small Size Enables Multi-LoRA Serving: Turbo speculators are designed to be compact (tens of MB), making them well suited to multi-LoRA serving from a single GPU with LoRAX, our multi-LoRA serving framework.

- Iterative Improvement: If you have a LoRA that was initially trained outside of Predibase and you're looking to improve its speed, Turbo makes this simple. Additionally, as you collect more data, you can continue to iteratively train and enhance your Turbo, ensuring it becomes faster and more efficient over time.

This feature is particularly beneficial for users who need to scale their applications and require faster response times from their models without sacrificing the reliability of the results. By enabling Turbo on your LoRAs, Predibase helps streamline your operations, allowing for quicker computations and more efficient data handling across various AI-driven tasks.
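This post does not detail Turbo's internals beyond the "custom speculator". One well-known technique that fits the description of "faster with exactly the same output" is speculative decoding with greedy verification, so the toy sketch below illustrates that technique under that assumption. The `target_next` and `draft_next` functions are stand-ins for a large model and a small speculator; nothing here is Predibase code.

```python
from typing import Callable, List, Sequence

NextToken = Callable[[List[int]], int]


def greedy_decode(target_next: NextToken, prompt: Sequence[int], max_new: int) -> List[int]:
    """Baseline: one target-model call per generated token."""
    tokens = list(prompt)
    for _ in range(max_new):
        tokens.append(target_next(tokens))
    return tokens


def speculative_decode(
    target_next: NextToken,
    draft_next: NextToken,
    prompt: Sequence[int],
    max_new: int,
    lookahead: int = 4,
) -> List[int]:
    """Draft several tokens cheaply, then keep only what the target agrees with."""
    tokens = list(prompt)
    limit = len(prompt) + max_new
    while len(tokens) < limit:
        # 1. The cheap speculator guesses a short continuation.
        draft: List[int] = []
        for _ in range(min(lookahead, limit - len(tokens))):
            draft.append(draft_next(tokens + draft))
        # 2. The target checks each guess. A real system scores all draft
        #    positions in one batched forward pass, which is the speedup.
        for proposed in draft:
            expected = target_next(tokens)
            tokens.append(expected)  # always the target's own choice
            if proposed != expected:
                break  # first mismatch: discard the rest of the draft
    return tokens


def target_next(tokens: List[int]) -> int:
    """Toy 'large model': a deterministic rule over the last token."""
    return (tokens[-1] * 3 + 1) % 7


def draft_next(tokens: List[int]) -> int:
    """Toy speculator: agrees with the target except after tokens 0, 3, 6."""
    guess = target_next(tokens)
    return guess if tokens[-1] % 3 else (guess + 1) % 7


baseline = greedy_decode(target_next, [2], max_new=12)
fast = speculative_decode(target_next, draft_next, [2], max_new=12)
```

Because every appended token is the target model's own choice, `fast` is identical to `baseline` no matter how often the speculator is wrong. A better speculator only changes how many tokens are accepted per verification step, which is why further training on new data can make it faster without changing the output.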

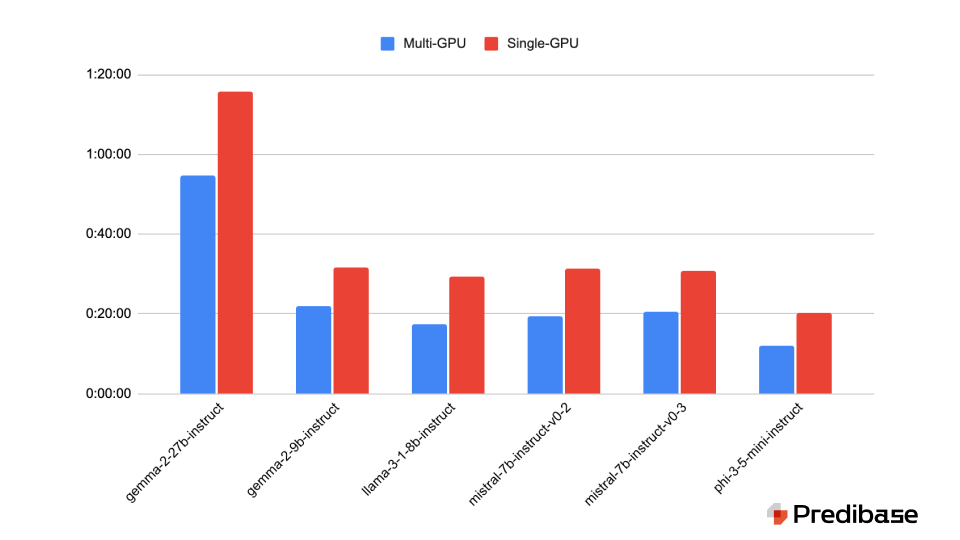

Multi-GPU fine-tuning

We recently introduced the ability to use multiple GPUs for a single fine-tuning job. This advancement allows for the processing of substantial datasets that traditional setups or open-source tools would struggle to handle efficiently. By supporting simultaneous operations across multiple GPUs, Predibase significantly cuts down training times, enabling models to learn from larger volumes of data more quickly than ever before. This multi-GPU strategy is designed to optimize resource usage and accelerate model training processes, which is crucial for applications requiring vast datasets.

Larger models see a consistent speedup on a 17K example dataset (3 epochs, H100 GPUs).
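One common way to spread a fine-tuning job over several GPUs is data parallelism, where each GPU processes a different slice of every batch. The post does not say which strategy Predibase uses, so the sketch below is an assumption for illustration, and the per-GPU batch size of 8 is hypothetical. It shows how a 17K-example dataset would be sharded and how the number of optimizer steps per epoch shrinks as GPUs are added.

```python
import math
from typing import List


def shard_indices(num_examples: int, world_size: int, rank: int) -> List[int]:
    """Example indices assigned to one GPU (`rank`) out of `world_size`."""
    if not 0 <= rank < world_size:
        raise ValueError("rank must be in [0, world_size)")
    return list(range(rank, num_examples, world_size))


def steps_per_epoch(num_examples: int, per_gpu_batch: int, world_size: int) -> int:
    """Optimizer steps needed to see every example once."""
    global_batch = per_gpu_batch * world_size
    return math.ceil(num_examples / global_batch)


NUM_EXAMPLES = 17_000
one_gpu = steps_per_epoch(NUM_EXAMPLES, per_gpu_batch=8, world_size=1)    # 2125
four_gpus = steps_per_epoch(NUM_EXAMPLES, per_gpu_batch=8, world_size=4)  # 532
```

Fewer steps per epoch does not translate one-to-one into wall-clock savings, since GPUs must synchronize gradients after each step. That communication overhead is one reason speedups vary with model size.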

More from the archives

If you’re looking for a few more stocking stuffers to read up on, check out some of our earlier announcements from 2024!

- LoRA Land: Fine-Tuned Open-Source LLMs that Outperform GPT-4

- How we accelerated fine-tuning by 15x in less than 15 days

- LoRAX + Outlines: Better JSON Extraction with Structured Generation and LoRA

- Introduced support for completions style fine-tuning (beta) and chat fine-tuning (beta) in addition to instruction fine-tuning

- How to Generate Synthetic Data and Fine-tune a SLM that Beats GPT-4o

Looking Forward to 2025

As we look toward 2025, we are excited to further enhance our model support and introduce new training strategies that respond to our users' evolving needs. We remain committed to improving our platform’s usability, security, and performance, ensuring that Predibase continues to lead in the fine-tuning technology space.