Advanced reasoning models like DeepSeek-R1 are pushing the boundaries of AI’s ability to handle complex problem-solving. These models can reason through intricate logic, generate step-by-step mathematical solutions, and provide explainable outputs—making them incredibly powerful for high-stakes decision-making.

However, this advanced reasoning comes at a cost: slow throughput. Reasoning models "think" by generating a lot of tokens, and autoregressive generation produces those tokens one at a time. Unlike conventional LLMs that typically answer directly, reasoning models like DeepSeek-R1 first decompose problems into structured steps, following a logical "chain of thought." Every intermediate step is emitted as additional tokens, which further increases the overall processing time.

As a result, the combination of structured reasoning, longer outputs, and iterative thinking significantly reduces inference speed, making these models less practical for real-time applications like customer service chatbots or interactive AI assistants.

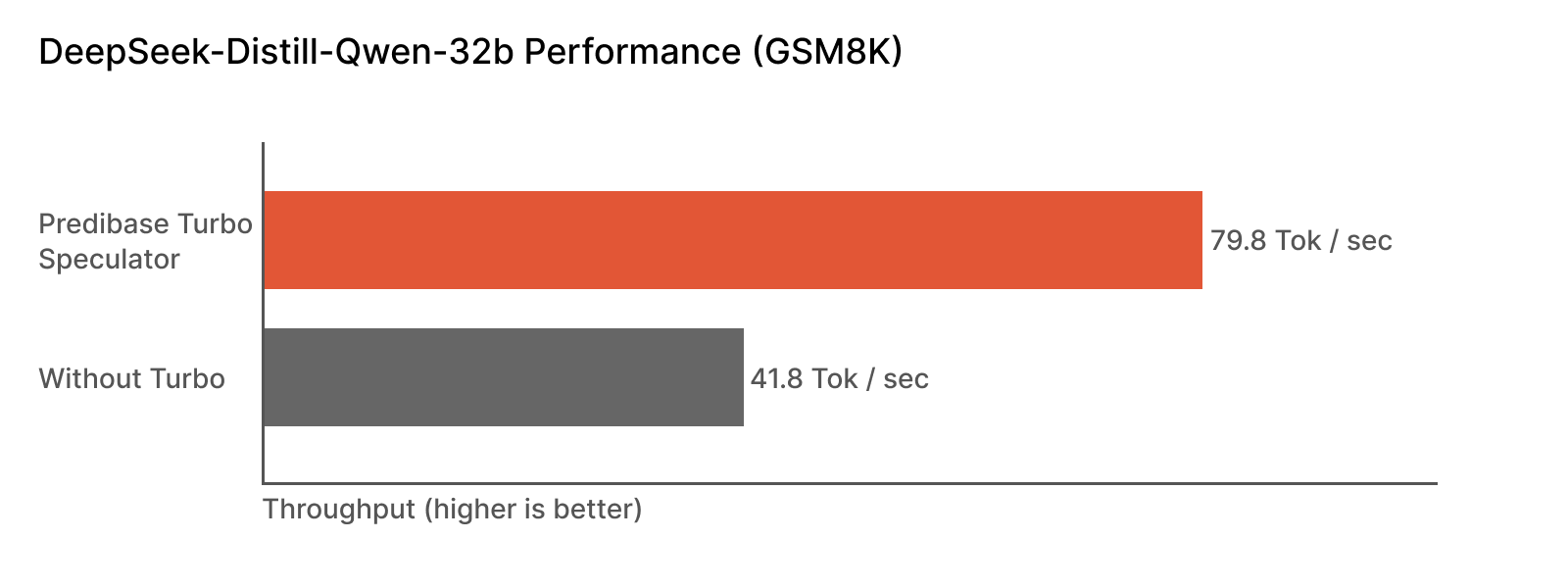

Last year, we introduced Turbo LoRA, a novel parameter-efficient method for fine-tuning LLMs for faster inference, along with Turbo Speculation. With Turbo Speculation, we can improve per-request throughput for reasoning models by roughly 2x.

This blog explores how Predibase Turbo addresses these challenges, unlocking faster inference speeds without sacrificing model quality.

The Power and Pitfalls of Reasoning Models

Why Are Reasoning Models Important?

Models like DeepSeek-R1 and OpenAI’s o1 excel in:

- Mathematical Problem Solving – These models can show their work, providing detailed reasoning behind calculations rather than just giving an answer.

- Code Generation and Debugging – They understand the structure and intent of code, making them invaluable for AI-assisted programming.

- Logical and Multi-Step Reasoning – Unlike simpler models that rely on pattern matching, reasoning models explain their decisions with step-by-step logic.

The Throughput Bottleneck

The problem? These reasoning traces drastically increase the number of tokens generated per response. While the chain of thought improves transparency and interpretability, every extra token adds latency, making these models less viable for real-time use cases.

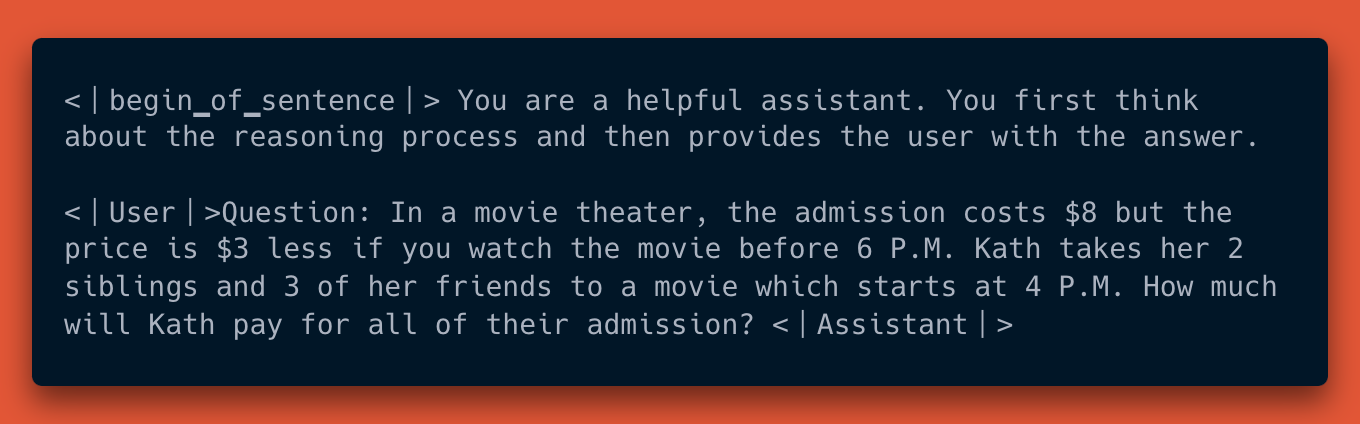

Let's look at a real example showing how reasoning models generate tokens and where Turbo can help. Here's a simple math problem about movie ticket prices:

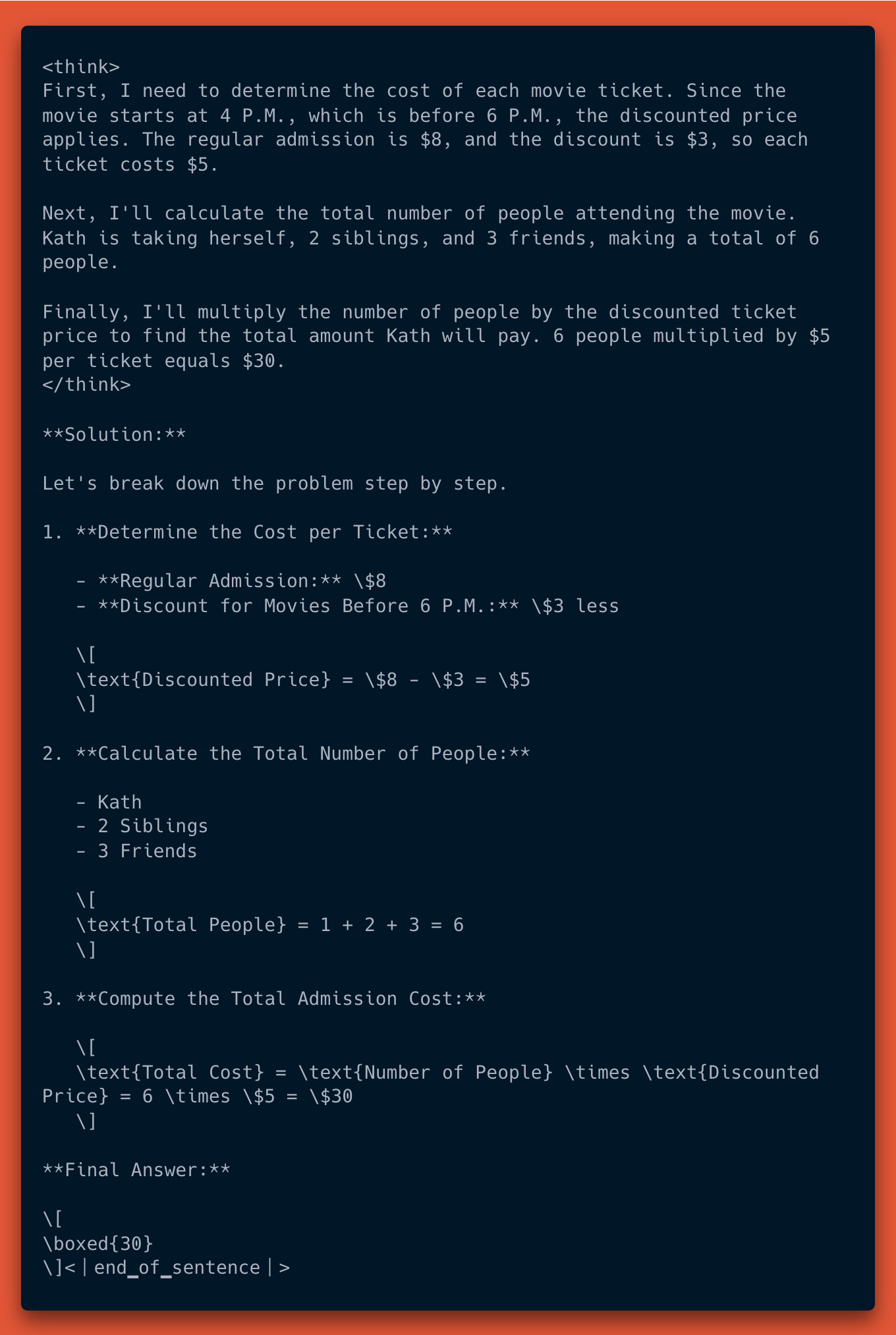

Response:

This response generated 344 tokens - much more than a simple "The total cost is $30" would require. But look at the patterns in how these tokens are generated:

1. The thinking process follows a predictable structure:

- Setting up the problem ("First, I need to...")

- Breaking down the components (ticket cost, number of people)

- Mathematical operations

- Final conclusion

2. The mathematical notation is highly structured:

- LaTeX formatting tokens (\[, \], \text{})

- Common equation patterns ($ signs, arithmetic symbols)

- Repeated formatting elements (**, -, newlines)

When we enabled Turbo speculation on this example, inference time dropped by roughly 46% (from 10.31 to 5.61 seconds). This speedup comes from Turbo learning to predict these common patterns. For instance, after seeing "\[" it can confidently predict that "\text{" will appear soon, and after an opening "**" it knows a closing "**" will be needed to end the bold formatting.

This ability to predict formatting, mathematical notation, and reasoning structures allows Turbo to generate multiple tokens in parallel while maintaining the exact same output quality. The more structured the reasoning, the more opportunities there are for Turbo to make accurate predictions about upcoming tokens.
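To make the link between predictability and speed concrete, here is a minimal sketch of the standard back-of-the-envelope estimate for speculative decoding. It assumes each drafted token is accepted independently with probability `alpha`, which is a simplification and not a description of Turbo's internals; the function name and parameters are illustrative.

```python
def expected_tokens_per_step(alpha: float, k: int) -> float:
    """Expected tokens emitted per verification pass of the main model.

    Assumptions (simplified model, not Turbo's actual implementation):
    - the speculator drafts k tokens per step;
    - each draft is accepted independently with probability alpha;
    - the main model always contributes one token itself (the correction
      at the first mismatch, or one extra token if all k drafts match).
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be between 0 and 1")
    if k < 0:
        raise ValueError("k must be non-negative")
    # The (i+1)-th token of a step is emitted only if the first i drafts
    # were all accepted, which happens with probability alpha**i.
    return sum(alpha ** i for i in range(k + 1))


if __name__ == "__main__":
    for alpha in (0.5, 0.7, 0.9):
        print(alpha, round(expected_tokens_per_step(alpha, k=4), 2))
```

Under this model, highly structured output (high `alpha`) yields several tokens per main-model pass, while unpredictable output falls back toward one token per pass.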

Why Complex Reasoning Models Slow Down Inference

1. Increased Token Generation

Because models like DeepSeek-R1 include step-by-step reasoning in their responses, they generate far more tokens than models that provide simple, direct answers. More tokens = slower response times.

2. Computational Complexity

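Every generated token requires a full forward pass through the model, and those passes run sequentially. A long reasoning trace therefore means hundreds or thousands of sequential passes per response, which makes these models computationally expensive to serve. Even with high-end GPUs, they struggle to maintain low-latency responses.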

3. Iterative Processes

Tasks like debugging code or solving math problems may require the model to "think" iteratively, revisiting and refining its outputs, which further increases computational load.

Introducing Predibase Turbo: Unlocking Faster Throughput

At Predibase, we developed Turbo to accelerate inference using an advanced technique called speculative decoding.

Instead of generating one token at a time, speculative decoding predicts multiple tokens in parallel and then verifies them before finalizing the output. Any "guessed" tokens that are inconsistent with the original model are discarded, ensuring there is zero difference in the final response. This allows models to maintain high-quality outputs while generating text significantly faster.

How Turbo Works (Simplified)

- A small, fast “speculator” predicts several tokens in parallel.

- The main model verifies them—if correct, they’re instantly used.

- If a guess is wrong, that token and any guesses after it are discarded, and generation resumes from the main model's own token at that position.

🎯 Result: Instead of waiting for one token at a time, the model generates multiple tokens per step—cutting latency dramatically.
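The draft-then-verify loop described above can be sketched in a few lines of Python. This is a toy illustration of greedy speculative decoding, not Predibase's implementation: `target_model`, `draft_model`, and `SCRIPT` are hypothetical stand-ins, and a real system would score all drafted positions in a single batched forward pass rather than calling the target once per position.

```python
from typing import Callable, List, Tuple

NextToken = Callable[[List[str]], str]  # greedy next-token function


def greedy_decode(model: NextToken, prompt: List[str], n: int) -> List[str]:
    """Baseline: one model call per generated token."""
    out = list(prompt)
    for _ in range(n):
        out.append(model(out))
    return out


def speculative_decode(target: NextToken, draft: NextToken, prompt: List[str],
                       n: int, k: int = 4) -> Tuple[List[str], int]:
    """Return (tokens, number of verification passes of the target)."""
    out = list(prompt)
    limit = len(prompt) + n
    passes = 0
    while len(out) < limit:
        # 1. The cheap speculator drafts k tokens.
        proposal: List[str] = []
        for _ in range(k):
            proposal.append(draft(out + proposal))
        # 2. The target verifies the drafts (counted as one pass).
        passes += 1
        accepted: List[str] = []
        for tok in proposal:
            expected = target(out + accepted)
            if tok != expected:
                accepted.append(expected)  # keep the target's token, drop the rest
                break
            accepted.append(tok)
        else:
            accepted.append(target(out + accepted))  # all matched: one extra token
        out.extend(accepted)
    return out[:limit], passes


# Toy models (hypothetical): the target follows a fixed script, and the
# speculator agrees with it except at every fifth position.
SCRIPT = ["**", "Total", "**", "=", "3", "x", "$10", "=", "$30", "."]


def target_model(ctx: List[str]) -> str:
    return SCRIPT[len(ctx) % len(SCRIPT)]


def draft_model(ctx: List[str]) -> str:
    return "?" if len(ctx) % 5 == 4 else SCRIPT[len(ctx) % len(SCRIPT)]


if __name__ == "__main__":
    tokens, passes = speculative_decode(target_model, draft_model, ["Q"], n=20)
    print(tokens == greedy_decode(target_model, ["Q"], 20), passes)
```

Because every emitted token is either a draft the target agreed with or the target's own choice, the output matches plain greedy decoding exactly; only the number of sequential target passes changes.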

Applying Turbo to DeepSeek-R1

To demonstrate Turbo’s effectiveness, we applied it to DeepSeek-R1-Distill-Qwen-32B, a distilled version of DeepSeek-R1 that retains strong reasoning capabilities while improving efficiency. By adding Turbo, we saw significant improvements in throughput, making the model far more practical for real-world applications.

Key Benefits of Turbo for Large Reasoning Models

1. Real-Time AI Becomes Feasible

Turbo typically drives a 2-3x speedup, making reasoning models viable for applications like:

- AI-powered customer support (instant responses instead of multi-second delays).

- AI copilots for developers that generate and debug code in near real-time.

- Healthcare AI assistants that provide fast, detailed diagnostic support.

2. Lower GPU Costs

- Faster inference means fewer GPUs are needed to handle the same workload.

- Cost savings scale with deployment size.

Benchmarking Turbo’s Performance

How to Apply Turbo to Your Own Models

For teams looking to boost throughput on their own fine-tuned models, Predibase offers an easy way to integrate Turbo.

Step-by-Step Guide

Let's talk a little about self-distillation here. The key idea is the following: we take an input dataset (in this case, GSM8K) and prompt the original DeepSeek-R1-Distill-Qwen-32B model to generate completions for every input. Then we fine-tune a Turbo Speculator on this self-distillation data so it learns to predict the base model's own outputs (its upcoming tokens) more efficiently. To perform the self-distillation, we make use of the new and shiny Predibase Batch Inference API. Let’s walk through the code for this:

```
import json

from datasets import load_dataset
from predibase import Predibase
# Import path assumed from the Predibase SDK; adjust if your SDK version differs.
from predibase.beta.config import BatchInferenceServerConfig

# Assumes you have a Predibase API token.
pb = Predibase(api_token="<YOUR_PREDIBASE_API_TOKEN>")

# 1. Load the GSM8K dataset
dataset = load_dataset("gsm8k", "main")

# 2. Format each question in the dataset into a model-ready chat prompt
def get_messages(item):
    return [
        {
            "role": "system",
            "content": "You are a helpful assistant. You first think about the reasoning process and then provides the user with the answer."
        },
        {
            "role": "user",
            "content": f"Question: {item['question']}"
        }
    ]

# 3. Embed the prompts into the Predibase Batch Inference API format
def get_batch_inference_row(idx, messages):
    return {
        "custom_id": str(idx),  # Each row in your dataset must have a unique string ID.
        "method": "POST",
        "url": "/v1/chat/completions",
        "body": {
            "model": "",  # Empty string means prompt the base model without any adapters applied.
            "messages": messages,
            "max_tokens": 1000,
            "temperature": 0,
        }
    }

# 4. Save the requests to a JSONL file (one JSON object per line)
with open('./deepseek-r1-distill-qwen-32b-gsm8k-self-distill.jsonl', 'w') as f:
    for idx, item in enumerate(dataset['train']):
        f.write(f'{json.dumps(get_batch_inference_row(idx, get_messages(item)))}\n')

# 5. Upload the requests to Predibase
pb.datasets.from_file('./deepseek-r1-distill-qwen-32b-gsm8k-self-distill.jsonl', name='ds-r1-qwen-self-distill')

# 6. Create the deployment config for batch inference
config = BatchInferenceServerConfig(
    base_model="deepseek-ai/DeepSeek-R1-Distill-Qwen-32B",
    accelerator="a100_80gb_100",
)

# 7. Submit the job
job = pb.beta.batch_inference.create(
    dataset='ds-r1-qwen-self-distill',
    server_config=config,
)

# 8. Once the job has finished, download the completions
pb.beta.batch_inference.download_results(job, dest="./deepseek-r1-distill-qwen-32b-gsm8k-self-distill-completions.jsonl")

# 9. Upload the completions to Predibase for fine-tuning
pb.datasets.from_file('./deepseek-r1-distill-qwen-32b-gsm8k-self-distill-completions.jsonl', name='ds-r1-qwen-self-distill-completions')
```

That’s it! You are ready to train your Turbo speculators. See https://docs.predibase.com/user-guide/fine-tuning/turbo_lora for steps on how to do that.
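Before uploading the request file, it can be worth checking it locally. The sketch below is a hypothetical helper, not part of the Predibase SDK: it only checks the properties the walkthrough itself relies on (one JSON object per line, a unique string `custom_id`, and a non-empty `messages` list under `body`).

```python
import json
from typing import Iterable


def validate_batch_rows(lines: Iterable[str]) -> int:
    """Validate batch-inference request rows and return how many were checked.

    Hypothetical helper: the checks mirror the fields used in the
    walkthrough, not an official Predibase schema.
    """
    seen_ids = set()
    count = 0
    for line_no, line in enumerate(lines, start=1):
        if not line.strip():
            continue  # tolerate blank lines
        row = json.loads(line)
        custom_id = row.get("custom_id")
        if not isinstance(custom_id, str):
            raise ValueError(f"line {line_no}: custom_id must be a string")
        if custom_id in seen_ids:
            raise ValueError(f"line {line_no}: duplicate custom_id {custom_id!r}")
        seen_ids.add(custom_id)
        messages = row.get("body", {}).get("messages")
        if not isinstance(messages, list) or not messages:
            raise ValueError(f"line {line_no}: body.messages must be a non-empty list")
        count += 1
    return count
```

A file handle can be passed directly, for example `with open(path) as f: validate_batch_rows(f)`, since iterating over a file yields its lines.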

Conclusion

Let's return to our movie ticket problem from earlier. When we deployed the model with and without Turbo enhancement, we saw striking differences:

- Original model: 10.31 seconds

- Turbo-enhanced model: 5.61 seconds

This is roughly a 46% reduction in latency (about a 1.8x speedup), which is typical of what you can expect when deploying Turbo-enhanced reasoning models in production. The more structured reasoning your use case requires, the more opportunities Turbo has to accelerate token generation.
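The two timings above can be turned into a speedup factor, a latency reduction, and per-request throughput with simple arithmetic. The sketch below assumes both runs produced the same 344-token response quoted earlier, which is consistent with Turbo leaving the output unchanged; the function name is illustrative.

```python
from typing import Dict


def speedup_summary(baseline_s: float, turbo_s: float, tokens: int) -> Dict[str, float]:
    """Derive comparison metrics from two end-to-end latencies.

    Assumes both runs generated the same number of tokens.
    """
    return {
        "speedup": baseline_s / turbo_s,
        "latency_reduction_pct": 100.0 * (1.0 - turbo_s / baseline_s),
        "baseline_tokens_per_s": tokens / baseline_s,
        "turbo_tokens_per_s": tokens / turbo_s,
    }


if __name__ == "__main__":
    # Timings and token count taken from the movie ticket example.
    for name, value in speedup_summary(10.31, 5.61, 344).items():
        print(f"{name}: {value:.2f}")
```

Note that a speedup factor and a latency reduction are different quantities: halving latency is a 2x speedup but a 50% reduction, so it helps to report which one is meant.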

To evaluate Turbo's impact on your specific workload:

- Deploy both standard and Turbo-enhanced versions of your model

- Measure token generation speed, latency, and throughput

- Monitor resource utilization to quantify cost savings

- Test with your actual production traffic patterns

These measurements will help you quantify both the performance gains and cost benefits of using Turbo in your specific application.
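One way to run the comparison listed above is a small timing harness. This is a minimal sketch under stated assumptions: `generate` is a hypothetical callable that you supply, which sends one prompt to a deployment and returns the number of completion tokens. It does not use any Predibase API directly and measures sequential single-request latency only, not behavior under concurrent load.

```python
import statistics
import time
from typing import Callable, Dict, List


def benchmark(generate: Callable[[str], int], prompts: List[str]) -> Dict[str, float]:
    """Time `generate` on each prompt (prompts must be non-empty)."""
    latencies: List[float] = []
    total_tokens = 0
    for prompt in prompts:
        start = time.perf_counter()
        total_tokens += generate(prompt)
        latencies.append(time.perf_counter() - start)
    total_time = sum(latencies)
    ordered = sorted(latencies)
    p95_index = min(len(ordered) - 1, int(0.95 * len(ordered)))
    return {
        "mean_latency_s": statistics.mean(latencies),
        "p95_latency_s": ordered[p95_index],
        "tokens_per_s": total_tokens / total_time if total_time > 0 else float("inf"),
    }


def compare(baseline: Callable[[str], int], turbo: Callable[[str], int],
            prompts: List[str]) -> Dict[str, object]:
    """Benchmark both deployments on the same prompts and report the speedup."""
    base = benchmark(baseline, prompts)
    fast = benchmark(turbo, prompts)
    return {
        "baseline": base,
        "turbo": fast,
        "speedup": base["mean_latency_s"] / fast["mean_latency_s"],
    }
```

Using the same prompts for both deployments, ideally sampled from real traffic, keeps the comparison fair because output length drives most of the latency.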

The trade-off between reasoning quality and throughput has long been a challenge in AI model serving. Predibase Turbo eliminates this bottleneck, making it possible to deploy complex reasoning models at production speed.

Ready to speed up your AI? Get started with Predibase today or request a demo

Frequently Asked Questions

What is self-distillation in AI models?

Self-distillation is a technique where a model learns to mimic its own outputs more efficiently. In our approach, we prompt the original DeepSeek-R1-Distill-Qwen-32B model to generate completions on a dataset (like GSM8K), then fine-tune a smaller, faster "Turbo Speculator" model to predict these outputs. This enables the speculator to learn patterns in the larger model's reasoning process, making token prediction more efficient without sacrificing quality.

How does Turbo Speculation accelerate reasoning in AI models?

Turbo Speculation uses a pattern-aware approach to generate multiple tokens in parallel instead of sequentially. It identifies predictable structures in reasoning outputs—such as mathematical notation, formatting patterns, and logical steps—and generates these tokens simultaneously. The main model then verifies these predictions, keeping correct ones and only recalculating when necessary. This parallel processing can achieve up to 2x faster inference without changing the final output.

Why do reasoning models like DeepSeek-R1 have slower inference speeds?

Reasoning models like DeepSeek-R1 are slower for three main reasons:

- They generate substantially more tokens per response due to step-by-step reasoning

- Every token requires a full forward pass through a large model, so compute grows with output length

- The iterative nature of problem-solving requires additional processing cycles

For example, a simple math problem might generate 344 tokens with detailed reasoning when a direct answer would only need 5-10 tokens. This thoroughness is valuable for accuracy but creates throughput challenges.

How can Turbo Speculation reduce inference time for AI models?

Turbo Speculation reduces inference time by:

- Predicting multiple tokens simultaneously instead of one at a time

- Learning domain-specific patterns that appear in model outputs

- Prioritizing predictable sequences (like formatting elements and standard notation)

- Avoiding redundant calculations through efficient verification

- Specializing to specific tasks through fine-tuning

Our testing shows roughly a 2x speedup for specialized tasks.

What are the hardware requirements for DeepSeek models?

DeepSeek-R1 models typically require high-end GPUs with significant VRAM. The DeepSeek-R1-Distill-Qwen-32B model we used in our testing runs optimally on A100 and H100 GPUs. With Turbo Speculation enabled, you can either:

- Achieve faster inference on the same hardware

- Maintain similar speeds on less powerful hardware

- Handle higher throughput with the same infrastructure

This flexibility helps organizations optimize their GPU resources based on their specific performance and cost requirements. Learn how to deploy DeepSeek on Predibase!

How does GPU performance impact AI model inference speed?

GPU performance directly impacts inference speed in several ways:

- VRAM capacity determines how much of the model can be loaded at once

- Compute capacity affects how quickly tokens can be processed

- Memory bandwidth influences data transfer speeds during processing

- Parallelization capabilities determine how many operations can run simultaneously

With Turbo Speculation, we can better utilize available GPU resources by parallelizing token generation, reducing the number of sequential operations needed, and making more efficient use of computational resources.
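As a rough illustration of the VRAM point, the weight footprint of a model can be estimated from its parameter count and numeric precision. The sketch below assumes about 32 billion parameters (taken from the model name) stored in 16-bit precision; it ignores the KV cache, activations, and any speculator weights, so real requirements are higher.

```python
def weight_memory_gb(n_params: float, bytes_per_param: float) -> float:
    """Approximate memory needed for model weights alone, in GB (1e9 bytes)."""
    return n_params * bytes_per_param / 1e9


if __name__ == "__main__":
    # Assumption: ~32e9 parameters in 16-bit precision (2 bytes each).
    weights_gb = weight_memory_gb(32e9, 2)
    headroom_gb = 80 - weights_gb  # on a single 80 GB accelerator
    print(f"weights: {weights_gb:.0f} GB, headroom: {headroom_gb:.0f} GB")
```

The remaining headroom is what is left for the KV cache, which grows with both batch size and the long outputs that reasoning models produce.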

If you're curious how AI can be trained to generate high-performance GPU code, check out our deep dive on reinforcement fine-tuning for AI-driven GPU optimization.

How to accelerate advanced reasoning models without sacrificing accuracy?

You can learn about smarter fine-tuning and how to double inference speed - check out this webinar.